If you ask around, there are very few people who work entirely in the cloud, and one of them, here in Munich, is Mihai Satmarean. He earned his VFX spurs as Head of IT at Trixter and has also worked at Dassault Systèmes and Mindware and in DevOps. You can find an up-to-date overview of his projects at is.gd/mihai_imdb; for more information, visit candr.me.

DP: Hello Mihai! Why did you develop C&R.me?

Mihai Satmarean: It was an idea I had been toying with since my early days in the studios, given the inflexibility of local data centres and many other limitations. I had been studying parallel computing and trying to understand networking and storage in a hybrid cloud/on-premises environment. Then Corona accelerated all of that and even made a pure cloud approach the number one priority.

I was very inspired by the technical work of Bernie Kimbacher, who has published several tutorials together with AWS. The main driving force behind putting the idea into practice was a former Trixter colleague, Adrian Corsei, who was Head of Studio at Orca at the time and who at some point called me to ask whether it would be possible to create a complete virtual studio.

I saw the opportunity to bring all the previous work and ideas together into a functioning PoC. So we put our heads together virtually, and in no time we had something running on AWS. It was all remote and asynchronous. Eight months later, we met in person for the first time.

For some time now, there has been a trend to move as much infrastructure as possible to the cloud, especially when there is not much hardware “on-prem”. The main reasons are flexibility, scalability and avoiding large upfront investments. Building infrastructure in the cloud makes it possible to link costs directly to projects and to scale the infrastructure with the project(s) with minimal upfront investment. Corona, working from home and supply chain issues have accelerated this idea, but I wouldn’t say they are the main reasons or drivers.

DP: What would that look like for an artist?

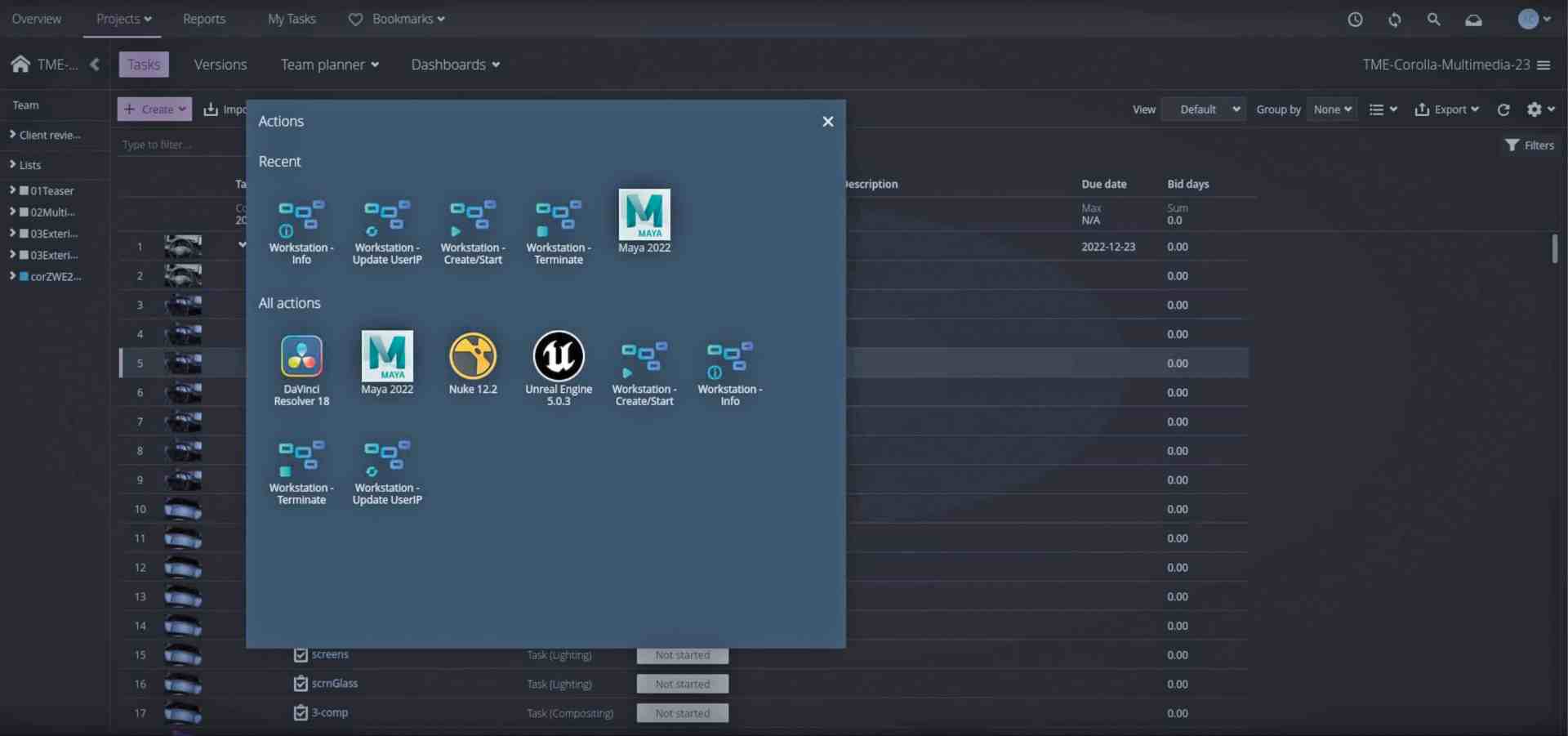

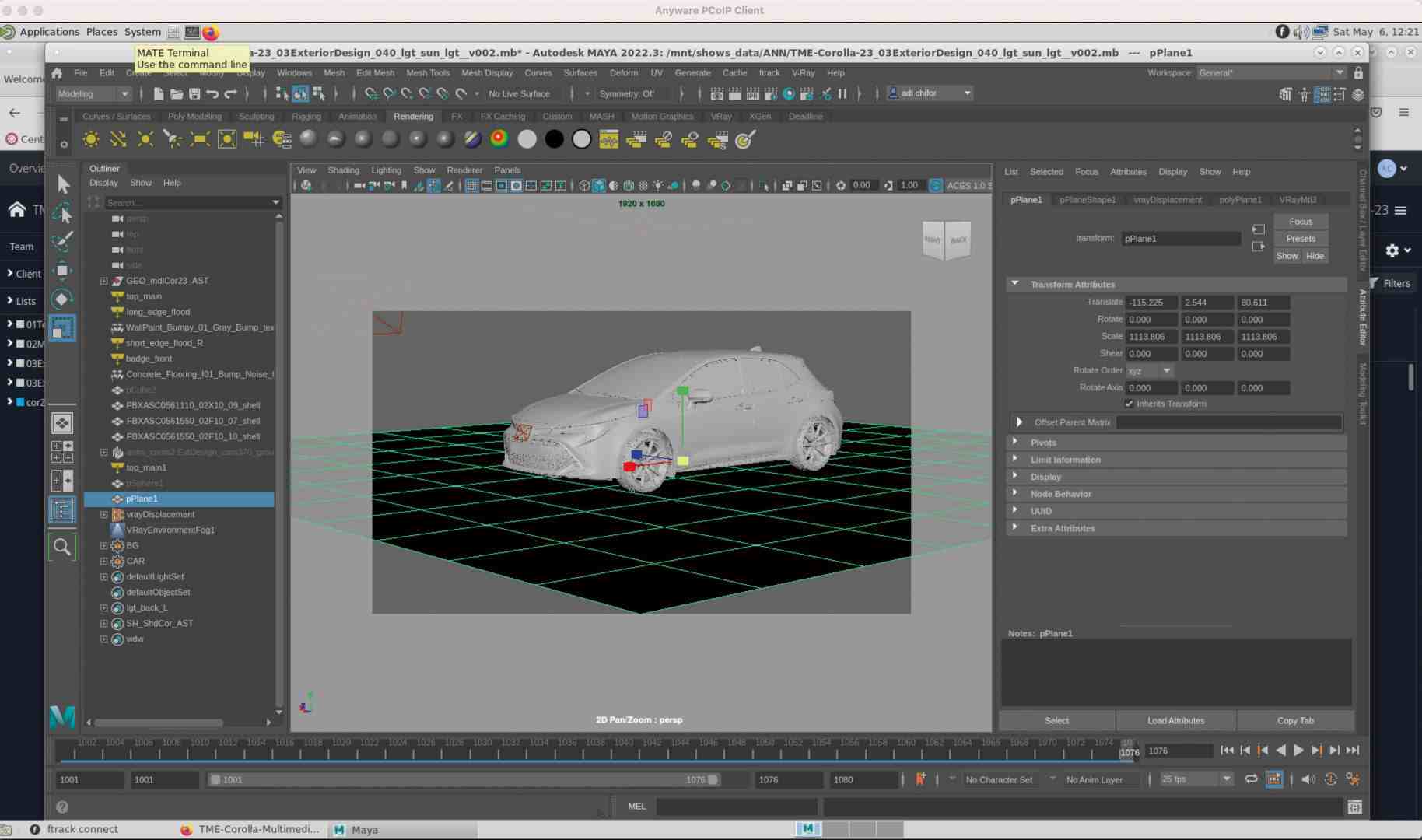

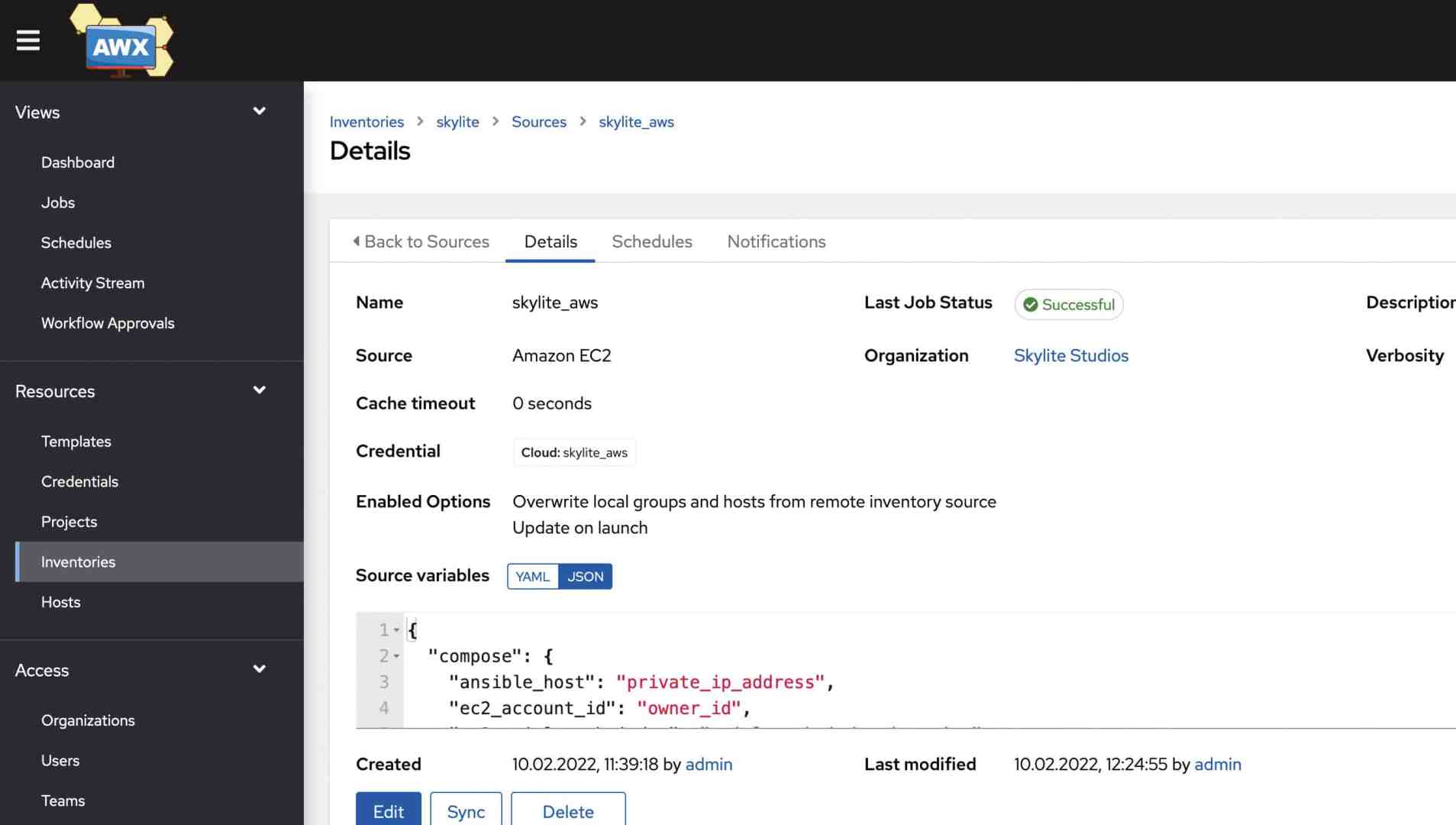

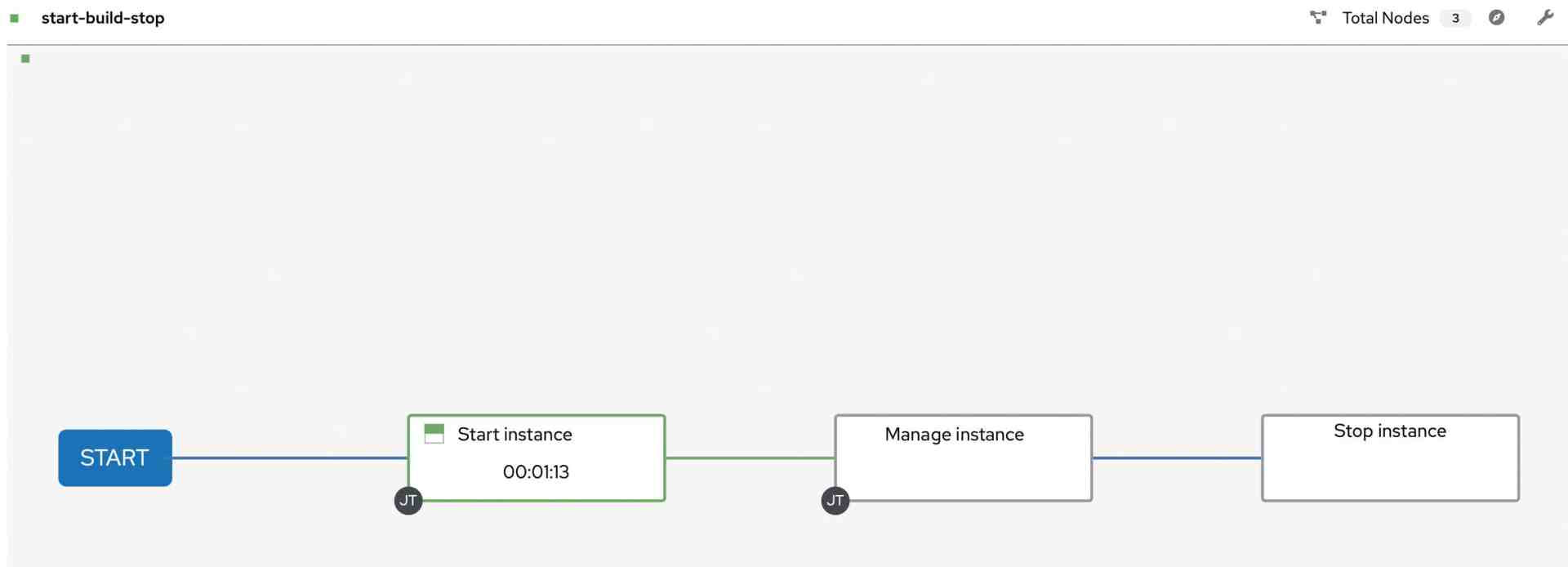

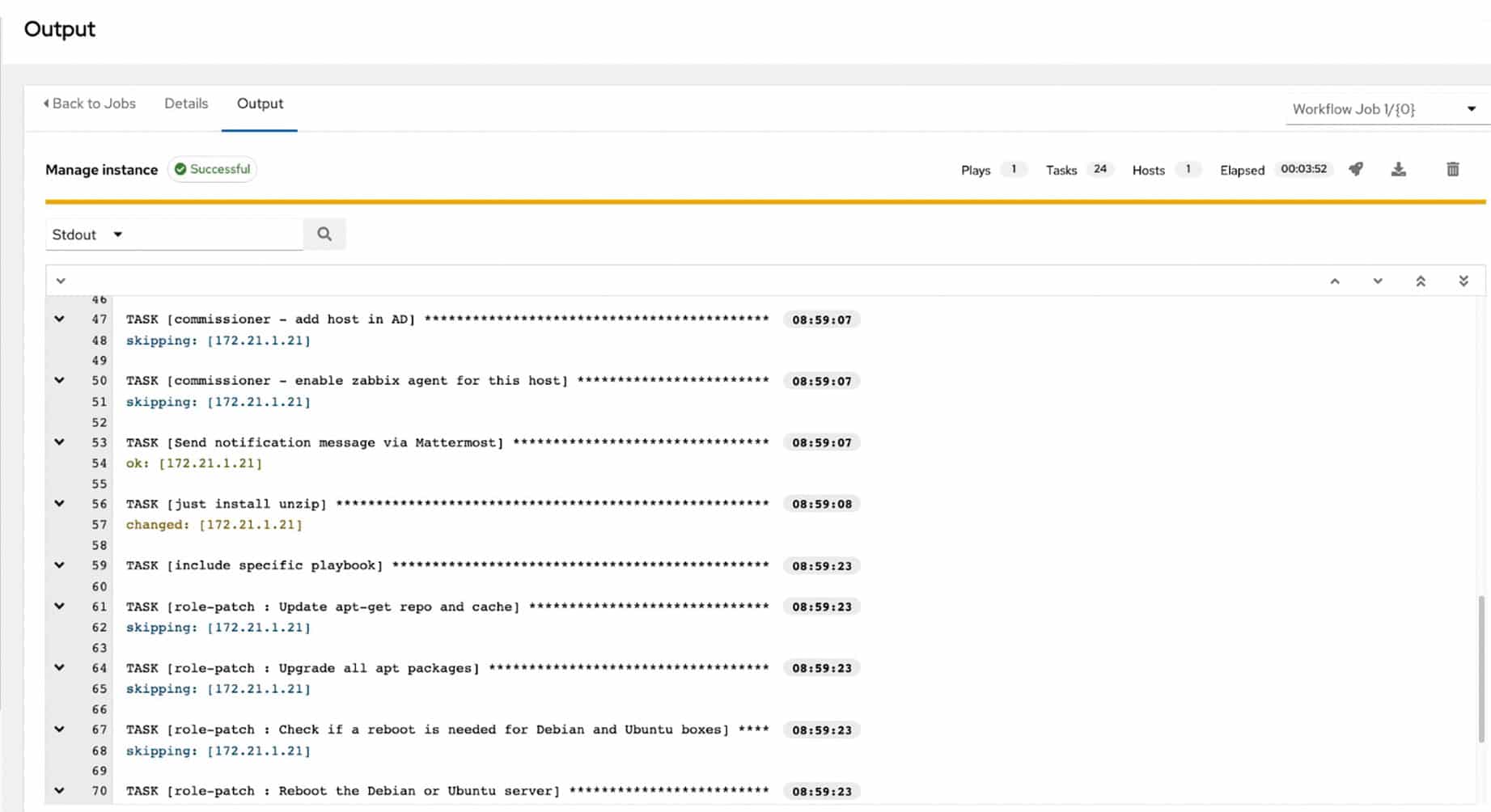

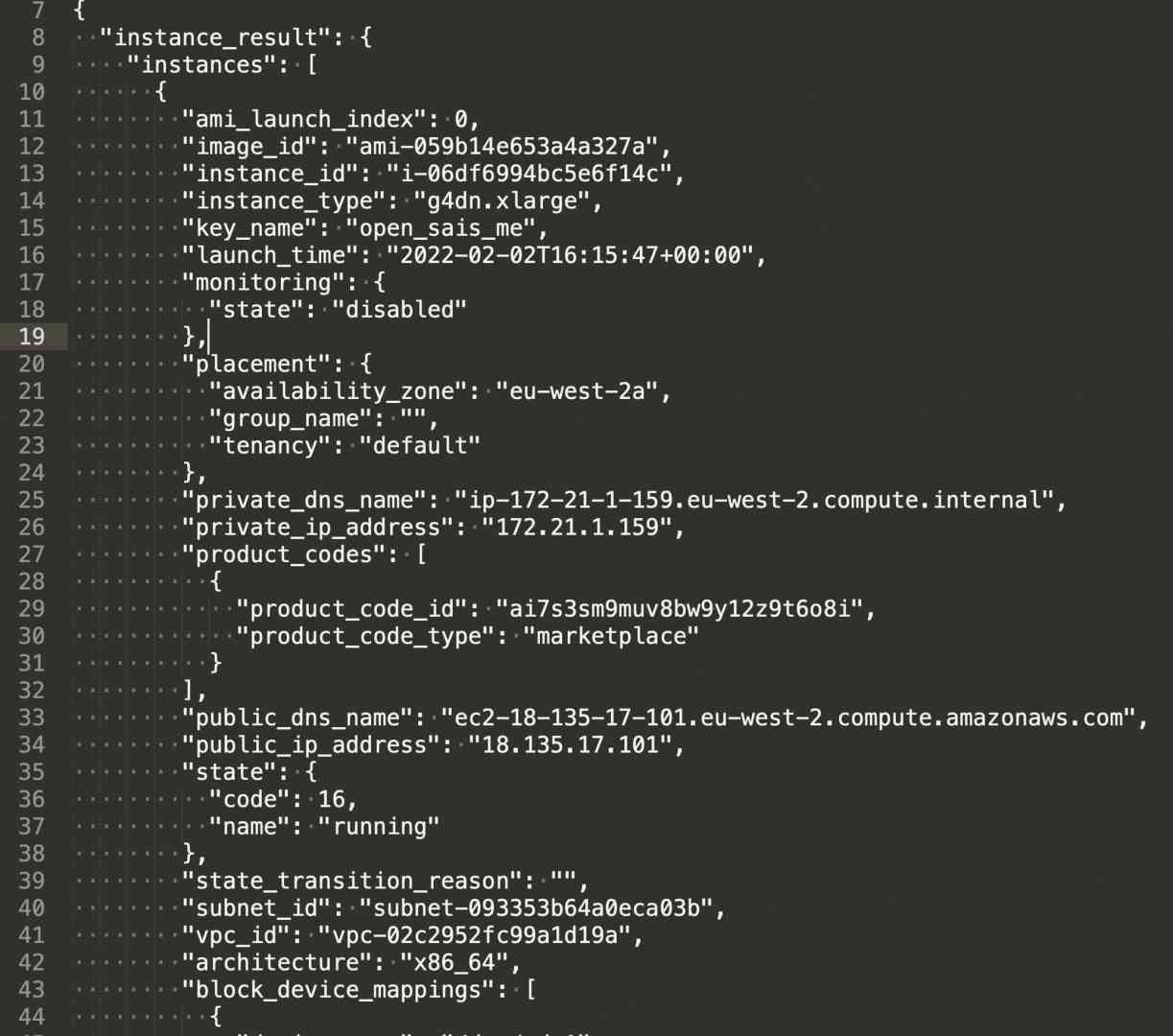

Mihai Satmarean: We can now offer better workstations. With C&R.me (the Configuration and Resources Management Engine), artists can set up their own workstations through the project management tool. That is very different: instead of IT provisioning, configuring and handing over workstations, we now have a ‘black box API endpoint’ with coded provisioning instructions. Through some pipeline magic, our project management software, ftrack, can request workstations and return the connection details to the user. Teradici’s PCoIP protocol is used to connect to the remote (cloud) workstation’s screen(s). For the artist, this means they open an interface, “activate” a workstation, and the rest happens automatically.
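To make the ‘black box API endpoint’ idea more concrete, here is a minimal Python sketch of what such an endpoint might do with a request coming from the project management tool. Everything in it is an assumption for illustration: the profile names, the `handle_request` function and the request/response fields are hypothetical and are not C&R.me’s actual interface, and the step where Infrastructure as Code would really create the machine is left as a comment.

```python
import uuid
from dataclasses import dataclass

# Hypothetical hardware profiles; the real C&R.me catalogue is not public.
PROFILES = {
    "comp": {"instance_type": "gpu-medium", "os": "rocky-linux"},
    "fx": {"instance_type": "gpu-large", "os": "rocky-linux"},
}


@dataclass
class WorkstationRequest:
    """What the project management tool sends when an artist activates a machine."""
    artist: str
    project: str
    profile: str


@dataclass
class WorkstationDetails:
    """What goes back to the artist so their remote desktop client can connect."""
    workstation_id: str
    hostname: str
    instance_type: str
    os: str


def handle_request(req: WorkstationRequest) -> WorkstationDetails:
    """Hypothetical 'black box' endpoint that turns a request into a workstation."""
    spec = PROFILES.get(req.profile)
    if spec is None:
        raise ValueError(f"unknown profile: {req.profile}")
    workstation_id = uuid.uuid4().hex[:8]
    # A real implementation would apply Infrastructure-as-Code templates here;
    # this sketch only builds the record that is returned to the artist.
    hostname = f"{req.project}-{req.artist}-{workstation_id}".lower()
    return WorkstationDetails(workstation_id, hostname, spec["instance_type"], spec["os"])


details = handle_request(WorkstationRequest("anna", "santo", "comp"))
print(details.hostname, details.instance_type)
```

The design point is that the artist never sees provisioning steps: the request carries only who, which project and which profile, and the endpoint owns everything else.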

Artists have a local client for the protocol and connect securely to the workstation’s PCoIP agent. The main difference for artists is that they reach the workstation through a remote desktop client such as Teradici’s rather than sitting in front of it. This means that the quality of the connection, in particular latency and bandwidth, has a big impact on the artist’s overall experience. This is of course easier to manage in an office than when the artist is working from home, but overall it gives artists great freedom, as they can access the same infrastructure both in the office and at home. In addition, artists have full control over their machine(s): they can create, delete, wake up, shut down or restart them, or even switch between different machines depending on what they need them for.
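The lifecycle control described above can be pictured as a small state machine. The following sketch assumes three states and a hypothetical `switch_to` helper that shuts down an artist’s other running machines before waking the one they need; that shutdown is a cost-saving choice made here for illustration, not something the interview specifies, and none of these names come from C&R.me.

```python
from enum import Enum


class State(Enum):
    STOPPED = "stopped"
    RUNNING = "running"
    DELETED = "deleted"


# Allowed (state, action) pairs; a hypothetical model, not the C&R.me API.
TRANSITIONS = {
    (State.STOPPED, "wake"): State.RUNNING,
    (State.RUNNING, "shutdown"): State.STOPPED,
    (State.RUNNING, "restart"): State.RUNNING,
    (State.STOPPED, "delete"): State.DELETED,
    (State.RUNNING, "delete"): State.DELETED,
}


class Workstation:
    def __init__(self, name: str) -> None:
        # "create" yields a stopped machine that the artist can wake on demand
        self.name = name
        self.state = State.STOPPED

    def apply(self, action: str) -> State:
        key = (self.state, action)
        if key not in TRANSITIONS:
            raise ValueError(f"cannot {action} a {self.state.value} workstation")
        self.state = TRANSITIONS[key]
        return self.state


def switch_to(machines: dict[str, Workstation], target: str) -> None:
    """Shut down the artist's other running machines, then wake the target."""
    for name, ws in machines.items():
        if name != target and ws.state is State.RUNNING:
            ws.apply("shutdown")
    if machines[target].state is State.STOPPED:
        machines[target].apply("wake")


machines = {"modelling": Workstation("modelling"), "lookdev": Workstation("lookdev")}
switch_to(machines, "modelling")
switch_to(machines, "lookdev")
print({name: ws.state.value for name, ws in machines.items()})
```

Rejecting invalid actions (for example shutting down a machine that is already stopped) keeps the artist-facing interface simple: every button either does something meaningful or reports why it cannot.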

DP: Has this actually been implemented or is it still in the planning phase?

Mihai Satmarean: No planning, we’re in the middle of it. Take our most recent project with the Spanish Orca Studios: we started as an experiment, designed our API and built some infrastructure, and amazingly, we soon had a proof of concept and launched a project. Then another and another, and we realised that we had a working design, because the number of artists can scale practically without limit. I think we can now take on projects of any size.

There’s still a lot to do in terms of refactoring code, concepts and so on. Of course, infrastructure isn’t everything: we still need pipelines, artists, supervisors and other people. But the way it works today, it’s mainly DevOps and Infrastructure as Code, and with that we are effectively offering Infrastructure as a Service.

DP: …and someone has already done that?

Mihai Satmarean: Yes, of course. We had three projects where we did exactly that, each with its own implementation: with Orca Studios, Nexodus and Skylite Films. For example, on “The Woman King” (is.gd/woman_king), Nexodus used our toolset to integrate more than 30 artists into their pipeline, all of them distributed, with no central office. Another project, Orca Studios’ “Santo” (is.gd/santo_orca), was realised in Spain with artists from all over Europe; it is a TV series about a mysterious drug dealer. We also did other things with Orca, like “The Good Nurse”.

DP: What were the pipelines in these projects?

Mihai Satmarean: The tools were the usual applications: Nuke, Maya and so on. So far we have only worked with cloud-native studios, so there was not much other infrastructure to connect. But we are currently working on a project where we connect to a small server for licences and other services.

DP: How long did set-up take for each project?

Mihai Satmarean: It depends on the complexity of the studio and the scope of its work. Our PoC project took about half a year until we had something concrete. Today, we could bring a medium-sized studio on board as a proof of concept in less than a month.

DP: Can’t people just rent a cloud instance themselves?

Mihai Satmarean: The cloud providers are not built for “consumers” (or artists), but mainly for application and infrastructure developers. In theory you can do everything yourself, but as with any DIY project, it depends on your skill level. With some of the tools we’ve developed, it’s much cheaper and quicker to bring us in, or any other cloud IT expert, than to retrain yourself or your artists. Between us, we now have over 100 years of combined experience in IT and VFX. In other words, longer than IT has been around.

DP: If it’s a product – even a service – does it have a price?

Mihai Satmarean: We started about two years ago, with four people working on it at least one day a week, until it became a full-time job with the first projects. As with any cloud project, infrastructure costs are quite low at the beginning, since practically only development costs need to be covered. Cloud providers also offer discounts or “credits” for cloud migrations and the like. With this type of project, costs grow with the size of the project (artists, farm, data, etc.). However, since every project is different, I can’t give a specific price; it depends on what you’re looking for.

DP: Assuming we bring C&R.me into a project – how long does it take before I can start working?

Mihai Satmarean: In our previous projects, we had a big migration that took about two months; today, starting a new “C&R.me-based studio” would take a couple of weeks, not more. Several factors play a role: the studio’s current infrastructure, the complexity of the pipeline, and where storage, libraries and licences are managed.

DP: Let’s talk about licences: What can I use besides Blender? Where will the licence servers be located and how will they communicate with the infrastructure?

Mihai Satmarean: Licences are still difficult to manage, just as in traditional studios. AWS has done some good things here by making its own tools available already licensed, which means Deadline is available. We run Mari, Substance, Nuke, Maya, 3DEqualizer, Houdini etc. more or less without problems. But beware: some tools require a physical machine as a licence server, and apart from that we’ve only had problems with some Adobe products (if I remember correctly). From a bird’s-eye view, though, it’s still difficult: there are regional restrictions, so the easiest route is for the studio to buy the licences itself, and this works for rented licences too. Medium-sized and larger studios usually already have them.

Some tools try to detect the physical machine or its hardware, and that obviously doesn’t work. I don’t want to say exactly what the problems are, because the engineers have promised to fix them! But these are “man-made limitations”, not fundamental problems.

If you are a smaller studio, you may need to talk to your preferred reseller, for example Dve As (www.dveas.de), who can help you get things rolling, because with most professional software suites, simply clicking the ‘buy’ button in the webshop is not enough. Another option, given the geographical restrictions, is to run certain parts via VPNs. And some tools are even “computer-bound”, in which case the artist has to do some administrative work whenever they switch computers.

DP: What other restrictions are there?

Mihai Satmarean: Not many. We have frameworks for connecting to other specialised cloud services; we even already have one, but we can’t talk about it publicly (yet). Over the last year we have met various providers of VFX-specific cloud tools. It depends on the type of tool or service and its maturity, but in most cases there is not much difference from an on-premise installation. The exception, of course, is when a service comes with its own specific hardware and the cloud provider has no solution for it.

DP: What is needed in terms of preparation?

Mihai Satmarean: We currently rely on Teradici’s PCoIP protocol (Teradici was recently acquired by HP, and its product has been renamed “HP Anyware”), for which there are thin clients and zero clients. So far, out of 100 artists, only 2 have complained about slowness, and the cause wasn’t even their bandwidth but their local LAN. For the artist, it takes about a minute to “switch on the machine”, and then he or she can connect to any prepared, already running or “on demand” machine.

DP: Let’s be honest: cloud infrastructure depends on the ISP – does that even work in Germany?

Mihai Satmarean: The artist needs a decent connection, but nothing excessive, and shouldn’t be too far away from the infrastructure. Working on a thin client with a 14.4k modem from a suburb of Passau on a server in Calcutta is not so convenient, but would theoretically even be feasible (laughs).

Working on remote machines takes some getting used to (some people adapt quickly, others just don’t like the idea and hold back). Also, some tasks require a better setup than others (modelling vs. animation vs. texturing, etc.).

Actual bandwidth also depends on screen size, for example. To give you a figure: two 4K screens require more bandwidth than one, and the “lowest” speed for working efficiently with two screens would be about a 1 Mbit/s connection. That seems low, but remember: Teradici compresses the images and, for the most part, only sends “updates”. So you can get away with a slower connection. But faster is always better!
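Why compression and update-only transmission matter becomes clear from a quick back-of-the-envelope calculation of uncompressed video bitrates. This sketch assumes 24 bits per pixel and 30 frames per second; these are illustrative values, and the results describe raw pixel data, not what PCoIP actually sends over the wire.

```python
def raw_bitrate(width: int, height: int, bits_per_pixel: int, fps: int, screens: int = 1) -> int:
    """Uncompressed video bitrate in bits per second, before any compression."""
    return width * height * bits_per_pixel * fps * screens


# Assumed values: 4K UHD resolution, 24-bit colour, 30 frames per second.
one_screen = raw_bitrate(3840, 2160, 24, 30)
two_screens = raw_bitrate(3840, 2160, 24, 30, screens=2)
print(f"one 4K screen:  {one_screen / 1e9:.2f} Gbit/s")
print(f"two 4K screens: {two_screens / 1e9:.2f} Gbit/s")
```

Under these assumptions, one 4K screen comes to roughly 6 Gbit/s of raw pixels and two screens to roughly 12 Gbit/s, which is why a remote-desktop protocol has to compress heavily and transmit mainly the regions of the screen that change.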

DP: But then you can’t quickly transfer the material from your computer to the cloud?

Mihai Satmarean: Your compositors deliver new film material? (Laughs) Storage is exclusively in the cloud, where we keep two copies in different regions, both as a backup and to optimise delivery. With VFX data volumes, copying everything to a new region can take more than a week; with this approach, we could switch the entire production to another region almost instantly. And after the ingestion process, artists hardly upload anything.
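The two-region storage approach can be sketched as a toy in-memory model. It is a deliberate simplification for illustration: writes go to both regions at once, the `DualRegionStore` class and the file path are hypothetical, and in practice replication would be handled by the cloud storage service rather than by application code.

```python
class DualRegionStore:
    """Toy model: every file exists in two regions; production reads from one."""

    def __init__(self, primary: str, secondary: str) -> None:
        self.copies: dict[str, dict[str, bytes]] = {primary: {}, secondary: {}}
        self.active = primary

    def put(self, path: str, data: bytes) -> None:
        # Simplification: write to both regions at once so neither copy lags behind.
        for copy in self.copies.values():
            copy[path] = data

    def get(self, path: str) -> bytes:
        # Artists read from whichever region production is currently running in.
        return self.copies[self.active][path]

    def switch_region(self) -> str:
        # Both copies are complete, so moving production is just a repoint,
        # not a bulk copy that could take more than a week.
        self.active = next(r for r in self.copies if r != self.active)
        return self.active


store = DualRegionStore("eu-central-1", "eu-west-1")
store.put("shot010/plate.exr", b"frame data")  # hypothetical ingested plate
print(store.switch_region())
print(store.get("shot010/plate.exr"))
```

The trade-off is paying for storage twice in exchange for never having to wait on a region-to-region copy when production has to move.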

DP: If this works, could you increase production more or less indefinitely?

Mihai Satmarean: Yes, you can add new artists and studios in a matter of minutes – for example, if you want to work in Nuke, you can bring a compositing specialist into the project with just a few clicks – the infrastructure behind it scales “with you”. What you don’t need costs nothing, and virtual machines are always available. And if they are not used, the virtual machines are just configuration files on a computer. For “networked productions” – as is often the case with European films – I think this is a great opportunity to reduce costs and invest the money in the most important thing – the artists.
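The “what you don’t need costs nothing” model can be expressed as a simple pay-per-use calculation. The sketch below uses hypothetical hourly rates and treats stopped machines as free; in practice, disks and storage attached to a stopped machine are typically still billed.

```python
from dataclasses import dataclass


@dataclass
class Usage:
    artist: str
    hourly_rate: float    # hypothetical price per running hour
    running_hours: float  # hours the virtual machine was actually switched on


def project_compute_cost(usages: list[Usage]) -> float:
    """Sum of running hours times rate; stopped machines contribute nothing."""
    return sum(u.hourly_rate * u.running_hours for u in usages)


team = [Usage("compositor", 2.0, 160), Usage("fx", 3.5, 40)]
print(project_compute_cost(team))
# Bringing in one more specialist only adds their own running hours.
team.append(Usage("compositor-2", 2.0, 20))
print(project_compute_cost(team))
```

Because cost is tied to running hours rather than to owned machines, adding a short-term specialist changes the bill only by what that person actually uses.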


DP: And what are the next things that will be developed for C&R.me?

Mihai Satmarean: There are a few things on the agenda. Windows machines are difficult to automate (although this is getting better with recent updates), but most of the artist machines already run Linux (CentOS, Rocky Linux, Ubuntu etc.), which is easy to automate and set up. GPU compute time is still relatively expensive in the cloud; not compared to buying the hardware, of course, but it’s still a factor.

Speaking of hardware prices: different regions don’t always offer the same types and quantities of machines, and their prices differ too. All of this plays a role in choosing a region.
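Region choice as described here boils down to a constrained minimum: the cheapest region that can actually supply the machines you need. The sketch below is illustrative only; the region names, instance types, availability counts and prices are made up and do not reflect any real cloud provider.

```python
from typing import Optional

# Hypothetical offers per region: instance type -> (machines available, price per hour).
REGIONS: dict[str, dict[str, tuple[int, float]]] = {
    "region-a": {"gpu-large": (10, 3.20), "gpu-medium": (50, 1.40)},
    "region-b": {"gpu-large": (40, 3.60), "gpu-medium": (80, 1.30)},
    "region-c": {"gpu-medium": (100, 1.10)},
}


def pick_region(instance_type: str, count: int) -> Optional[str]:
    """Return the cheapest region that can supply `count` machines, or None."""
    best = None  # (price, region) of the cheapest qualifying region so far
    for region, offers in REGIONS.items():
        offer = offers.get(instance_type)
        if offer is None:
            continue  # this region doesn't offer the machine type at all
        available, price = offer
        if available >= count and (best is None or price < best[0]):
            best = (price, region)
    return best[1] if best else None


print(pick_region("gpu-large", 20))   # region-a is cheaper but has too few machines
print(pick_region("gpu-medium", 60))  # region-b and region-c qualify; region-c is cheaper
```

Availability acts as a hard filter and price only decides among the regions that pass it, which mirrors the point that the cheapest region is useless if it cannot supply the machines.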

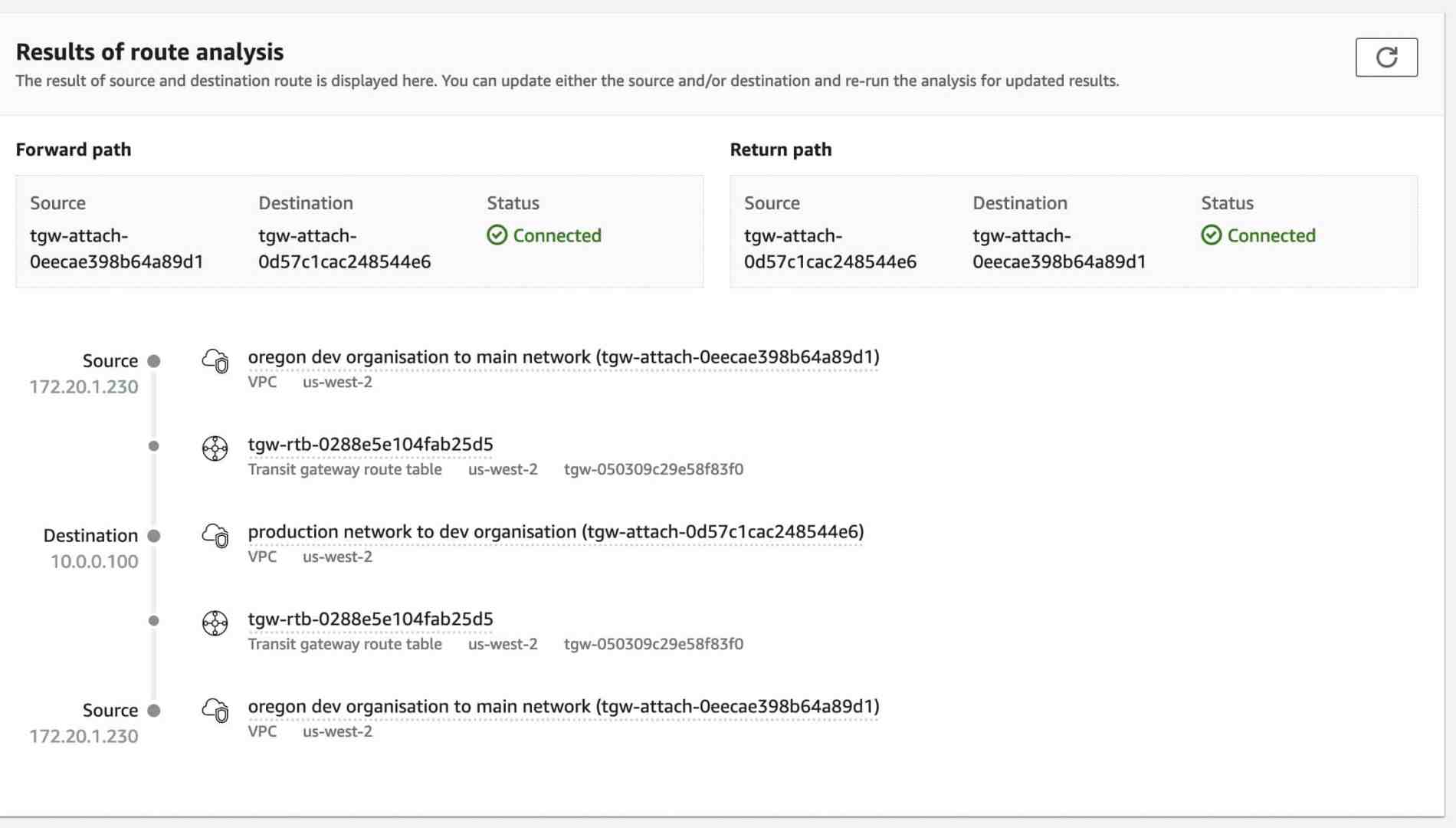

Think about it: if you already have a data centre, it’s easy to create your own “private cloud”, i.e. to use your own hardware the way you would use a public cloud. That could host your “core” artist machines that always need to be running, and only during production peaks do you expand to the public cloud providers, with the ability to connect individual artists or departments at any time. I’ve seen this be useful for episodic productions and TV series. That’s why we are currently working on enabling multi-cloud pivoting, i.e. switching from one cloud provider to another.

This is linked to “hybrid deployments” – you can use your on-premise hardware as a private cloud – and when production requires it, connect it to the public clouds such as AWS or Azure. We work with other cloud providers specialising in VFX – soon we’ll be able to name them (laughs). This way, you have your “baseline” and can ramp up or down as needed.
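The “baseline plus ramp-up” idea behind hybrid deployments can be sketched as a simple capacity split: each week, demand is served from on-premise machines up to the baseline, and anything above it bursts to a public cloud. The `plan_capacity` function and the weekly numbers are hypothetical.

```python
def plan_capacity(weekly_demand: list[int], on_prem_baseline: int) -> list[tuple[int, int]]:
    """Split each week's workstation demand into (on-prem, public-cloud burst)."""
    plan = []
    for demand in weekly_demand:
        on_prem = min(demand, on_prem_baseline)  # baseline machines are used first
        burst = demand - on_prem                 # only the excess goes to the public cloud
        plan.append((on_prem, burst))
    return plan


# Hypothetical episodic production: 20 "core" machines on-prem, peaks in weeks 2 and 3.
schedule = plan_capacity([10, 25, 40, 12], on_prem_baseline=20)
print(schedule)
```

Filling the baseline first makes sense because on-premise hardware is already paid for, while public-cloud machines are billed only for the weeks in which they are actually needed.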

DP: And if I want to have this toolset for my own production?

Mihai Satmarean: Well, you would email me at contact@skylitetek.com – and we’ll find something interesting!