“Red Poppies” (original title: “Czerwone Maki”) is a 2024 Polish war drama directed by Krzysztof Łukaszewicz. The film portrays the legendary Battle of Monte Cassino through the eyes of Jędrek, a young man who endures the harrowing experiences of a Soviet gulag and later joins the Polish II Corps.

The cinematography is crafted by Arkadiusz Tomiak, with Marek Warszewski serving as the art director and Agnieszka Sobiecka designing the costumes. The screenplay is also penned by director Krzysztof Łukaszewicz.

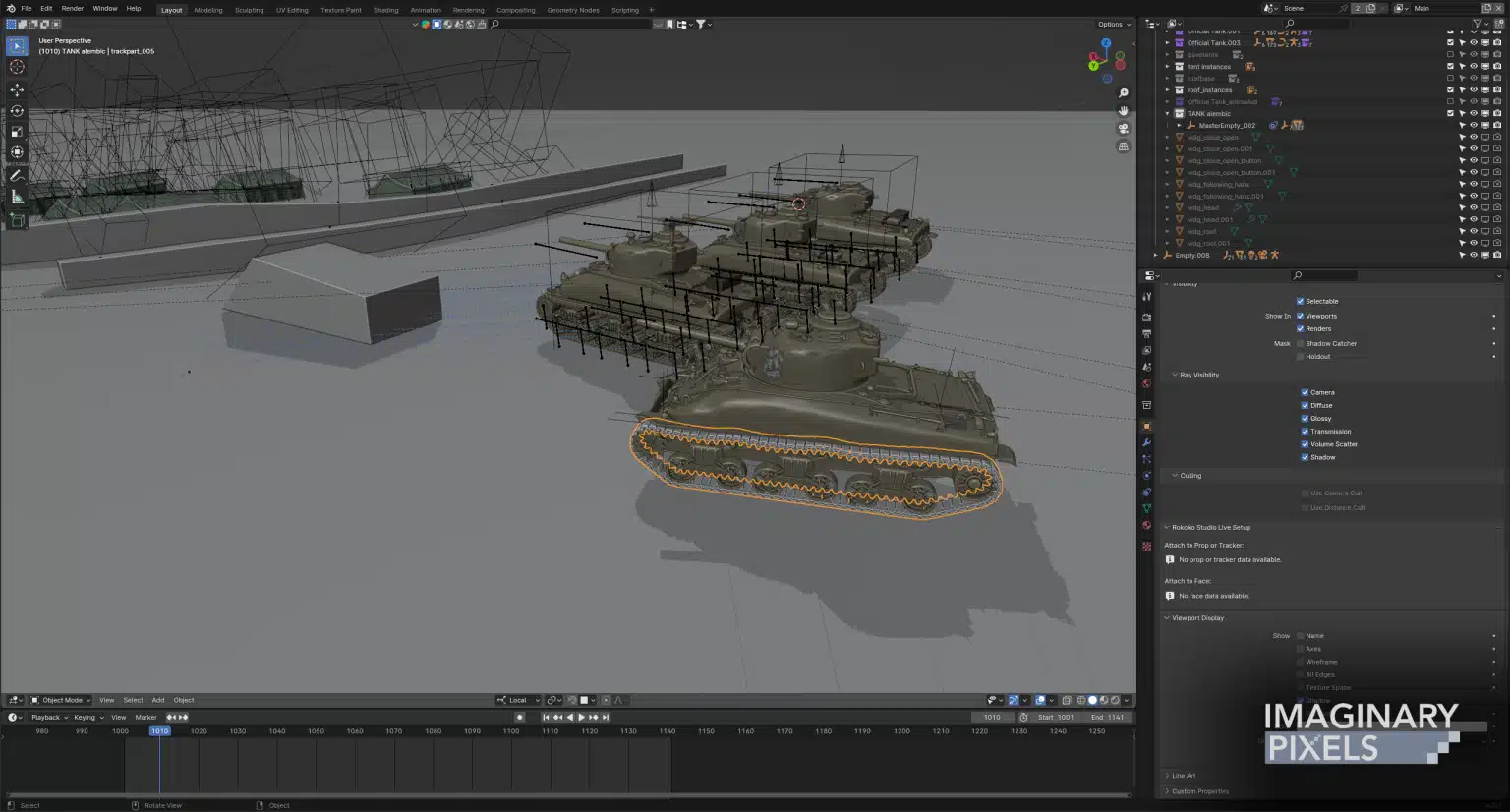

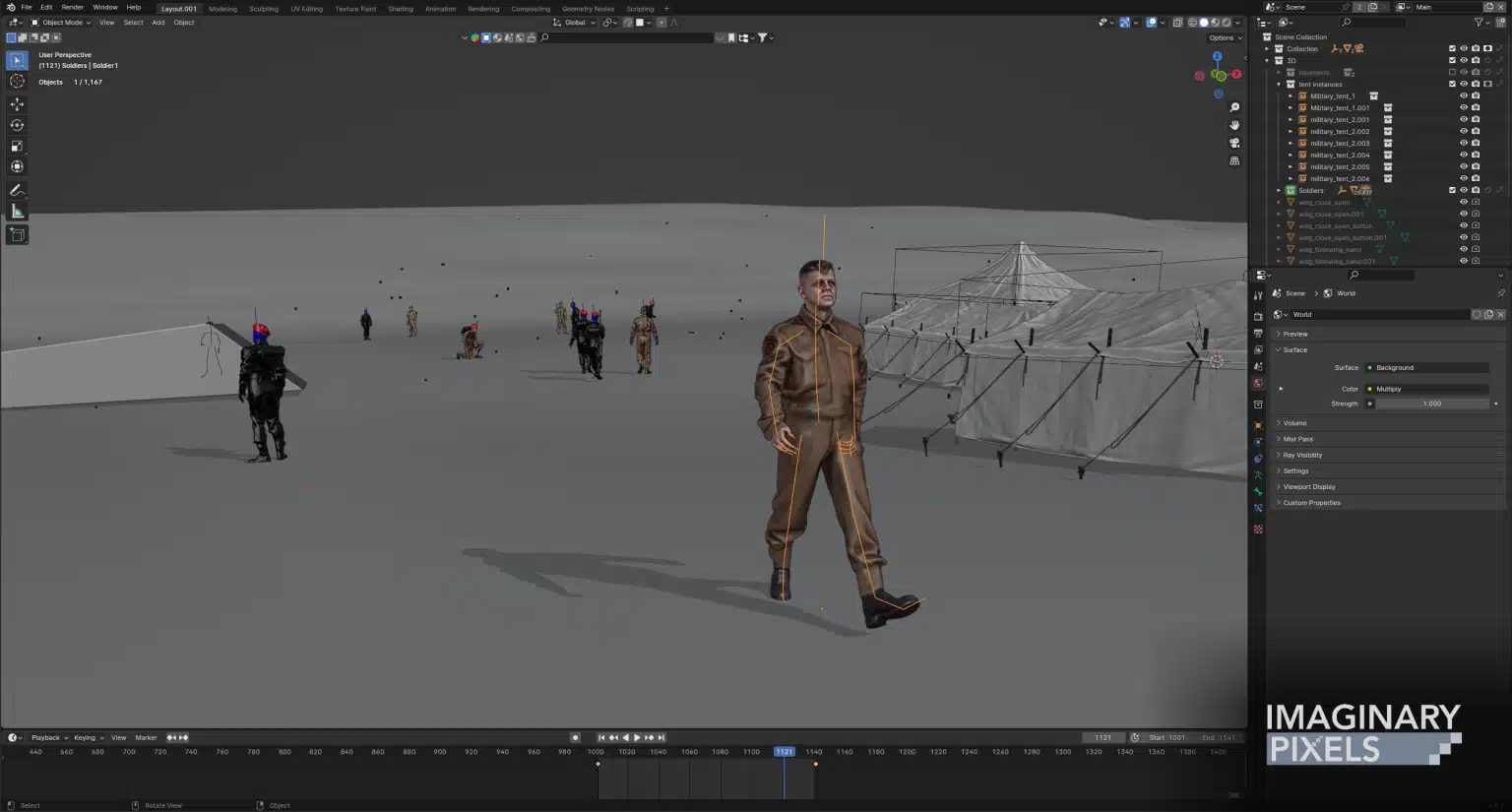

Produced by Wytwórnia Filmów Dokumentalnych i Fabularnych (WFDiF), “Red Poppies” is co-produced by ORKA, a company that expressed pride in contributing to the first Polish feature film about the Battle of Monte Cassino. The film’s visual effects were handled by Imaginary Pixels, a Polish VFX studio responsible for over 120 shots involving 3D tanks, set extensions, and the digital recreation of Wojtek the bear, a historical figure known for aiding Polish soldiers during World War II. https://kinoswiat.pl/film/czerwone-maki

The VFX team for “Red Poppies” included:

Maciek Wojtkiewicz – Senior Animator

Lukas Remis – VFX Supervisor

Artem Huza, Kajetan Czarnecki, Trendafil Kirilov, Jorge Grandes, Siarhei Talashka – Compositing Artists

Severi Kamppi – 3D Lead

Jyri Kataja – 3D Generalist

Patryk Ptasiński – FX Artist

DP: Let’s start with Red Poppies: How did you get involved with that production?

Lukas Remis: Red Poppies was a Polish production with one of the biggest budgets in recent years. It’s a war movie with over 400 VFX shots. Imaginary Pixels was one of three vendors, and we were brought in by the production company, which we had worked with in the past. For this project we delivered over 120 shots, with a team of 12 artists, in about four months. Our work involved 3D set extensions, crowd and tank multiplication, 3D creature creation and animation, and a lot of explosions and bullet hits.

The most complex part of the work was creating and animating a 3D version of Wojtek the bear. Wojtek was a Syrian brown bear adopted by Polish soldiers during World War II. There were about 15 shots with the creature, and many of them included interaction with the actors. Blender was our only tool for that. Every stage, from modelling, rigging and grooming to animation and rendering, was done in Blender. Our rig had some elements that were calculated automatically, like the skin around the mouth or the ears. The goal was to have more physically accurate movement and a faster workflow.

Another large part of our work was set extensions, usually mountains in the midground or background. For the background mountains, we created a 360-degree environment in Blender, matching the terrain and foliage of Monte Cassino in Italy, where the action takes place. We rendered that as a 22K equirectangular image (Nuke wasn’t able to handle a larger render with AOVs) with some passes and different light direction versions. That way, the compositing team was able to use it as a projection, for any angle in multiple shots, without involving the 3D artists each time.
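To illustrate why a single equirectangular render can serve any camera angle, here is a minimal sketch of the standard mapping from a view direction to a pixel in such an image. It is not the studio's code. The axis convention (Y up, -Z forward), the function name, and the 22000 x 11000 resolution are assumptions made for the example; the interview only says "22K".

```python
import math


def direction_to_equirect_pixel(direction, width, height):
    """Map a 3D view direction to (x, y) pixel coordinates in an
    equirectangular (latitude/longitude) image.

    Assumed convention (not from the interview): Y is up, -Z is forward,
    and the image centre corresponds to looking straight ahead.
    """
    dx, dy, dz = direction
    length = math.sqrt(dx * dx + dy * dy + dz * dz)
    if length == 0:
        raise ValueError("direction must be a non-zero vector")
    dx, dy, dz = dx / length, dy / length, dz / length

    longitude = math.atan2(dx, -dz)  # -pi..pi, 0 means straight ahead
    latitude = math.asin(dy)         # -pi/2..pi/2, 0 means the horizon

    u = longitude / (2 * math.pi) + 0.5  # 0..1, left to right
    v = 0.5 - latitude / math.pi         # 0..1, top to bottom
    return u * width, v * height


# Looking straight ahead lands in the centre of an assumed 22000 x 11000 image.
print(direction_to_equirect_pixel((0, 0, -1), 22000, 11000))  # (11000.0, 5500.0)
```

Because every direction maps to exactly one pixel, a compositor can reproject the same image for any shot camera, which is what removes the need for a new 3D render per angle.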

DP: Can you break down your postproduction pipeline—from asset creation to final render—and specify which tools you used at each step?

Lukas Remis: Our pipeline is based on Ubuntu Linux. Projects are managed using Kitsu, an open source production management and review platform. We’re using Blender exclusively for all 3D tasks, and its native renderer, Cycles, for rendering. Render management is done with CGRU Afanasy, and compositing in Nuke. We’ve used Houdini in the past for FX, but currently we’re mostly using EmberGen. Additionally, we’re using Krita for photo and texture editing and MRV2 for playback and review. We’re also using DaVinci Resolve for editing.

DP: Why did Imaginary Pixels, as a full, working VFX studio, shift toward open-source tools? Was this a gradual evolution or a clear strategic decision?

Lukas Remis: Personally, I have always been interested in the open source community and software, long before starting a career in VFX. I used to test and play with different Linux distributions. For a couple of years now, Linux (with Ubuntu as my personal favourite) has surpassed Windows in functionality and stability, so it was a natural choice of OS for all our machines. As for the other tools, like Blender, Kitsu or MRV2, the financial aspect is of course very important, but we only adopt an open source solution if it’s really capable of delivering what we need, efficiently. Blender was there from the beginning; the other tools came gradually, as we looked for solutions in different areas.

Kitsu, the project management platform, is definitely worth mentioning here. It’s wonderful software, with great people behind it. It was used, together with Blender, for this year’s Oscar winner: Flow.

DP: Are there areas in your pipeline where proprietary tools remain essential? What can’t be replaced by open-source alternatives—yet?

Lukas Remis: Obviously, in compositing we are stuck with proprietary software, with Nuke as the industry standard. And even the alternatives are not open source. Recently, I’ve been reaching out to several VFX studios to build a Natron development group, because I believe this software could take over a large part of Nuke compositing if its development were revived. Natron’s similarity to Nuke makes it a great candidate as an alternative compositing tool. Almost all the studios that I talked to expressed an interest in this initiative, and I have also talked to a “large” open source organization that would be interested in taking over Natron development, if appropriate funding is provided of course. I think a “Blender-like” development fund, with some VFX companies behind it, could provide the necessary funds for this.

FX software is another proprietary area. Houdini is fantastic software, but for a small studio like us it’s expensive, especially since simulations are not our daily tasks. That’s why we’ve started working with EmberGen. It’s still not close to the level of Houdini, but it successfully manages many of our tasks. And with the announced updates it will be even more useful.

DP: How do you manage bugs or tool limitations during production? Do you patch internally, rely on community support, or contract developers?

Lukas Remis: We don’t have in-house developers, so we must rely on the development team behind each piece of software. This can be difficult. For example, I reported a Blender bug over 7 years ago (Blender not importing the focal length animation of cameras in Alembic format) and it’s still not fixed! I imagine this is a relatively simple fix, but the bug is a pretty big problem. On Red Poppies we also had some issues with the bear’s fur and Alembic caches, but we found workarounds. Bugs are a thing, though, whether it’s open source or proprietary software. I have reached out to Nuke’s support several times without getting a solution to a problem, for example when the license server was not working properly. I can understand a bug in free software, but certainly not in crazy-expensive software like Nuke.

On the other hand, I had an amazing experience with the developers behind Kitsu and MRV2. They are very responsive and fast. I was especially blown away by Gonzalo Garramuño, the creator of MRV2. A couple of times he reacted within minutes of my posting a bug, and provided a fixed version of the software. I think open source developers and the community are in general more approachable.

DP: What’s your perspective on open file formats like USD, OpenEXR, Alembic, or OpenTimelineIO? Do they streamline your pipeline, or cause compatibility challenges?

Lukas Remis: Those are a “must”. OpenEXR and Alembic are standard daily-used formats, even to exchange data between different software internally. As for USD, we’ve used it on several occasions, where we needed to exchange 3D scenes with other studios – for example on “Return to Silent Hill”, for which we’ve been mostly building environments, using Blender.

DP: How much custom development went into making your pipeline as a whole work? Are you using off-the-shelf pipeline tools or building your own solutions?

Lukas Remis: Kitsu is great as a management and review platform, but it lacks software integration. So I’ve developed a program that connects to the Kitsu database using its API, and at the same time manages all our project and media files. It allows us to load specific project versions into DCC software, and submit output for review directly to Kitsu. This was created with Python. I know several programming languages, but I’d never done anything in Python, so I wrote the software with ChatGPT.

Also, I’ve decided to have Kitsu installed on our own internal server, which makes it free. But you can also choose Kitsu’s hosting and management, for a monthly fee, if you want to avoid any technical work.
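The interview does not describe how the in-house tool tracks file versions, so the following is only a rough sketch of one common approach: encoding the version in the file name and resolving the latest one. The `<name>_v###.blend` naming scheme and all function names are hypothetical, and the Kitsu side (publishing output for review through its API) is deliberately left out.

```python
import re
from pathlib import Path

# Hypothetical naming convention: "<name>_v<digits>", e.g. "sh010_anim_v003.blend".
VERSION_PATTERN = re.compile(r"^(?P<name>.+)_v(?P<version>\d+)$")


def parse_version(path):
    """Return (name, version) for a versioned file path, or None if it doesn't match."""
    match = VERSION_PATTERN.match(Path(path).stem)
    if match is None:
        return None
    return match.group("name"), int(match.group("version"))


def latest_version(paths, name):
    """Return the path with the highest version number for `name`, or None."""
    candidates = []
    for path in paths:
        parsed = parse_version(path)
        if parsed and parsed[0] == name:
            candidates.append((parsed[1], str(path)))
    if not candidates:
        return None
    return max(candidates)[1]


def next_version_name(paths, name, suffix=".blend"):
    """Return the file name the next saved version of `name` would get."""
    latest = latest_version(paths, name)
    number = parse_version(latest)[1] + 1 if latest else 1
    return f"{name}_v{number:03d}{suffix}"


files = ["sh010_anim_v001.blend", "sh010_anim_v003.blend", "sh020_anim_v009.blend"]
print(latest_version(files, "sh010_anim"))     # sh010_anim_v003.blend
print(next_version_name(files, "sh010_anim"))  # sh010_anim_v004.blend
```

Comparing the parsed integer rather than the file name string keeps the ordering correct once versions pass the zero-padding width (v1000 sorts after v999).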

DP: Open source reduces licensing costs, and no licence server means easy cloud deployment. Are you using tools on cloud or hybrid infrastructure?

Lukas Remis: At the moment, considering we’re a small studio, I feel more comfortable with on-premise hardware, especially since it’s hard to calculate the actual costs of cloud solutions; you only get estimates. I prefer to invest in our workstations or local render farm, because they’re going to be here waiting for the next project, even during downtime. And our workstations are prepared for freelancers to work remotely. But I’ve talked with Mihai Satmarean from skylitetek.com about the possibilities of implementing their cloud solutions, and this will definitely be a thing if we grow bigger or need a quick ramp-up. Skylitetek’s expertise is really valuable because they have experience working with VFX studios and their pipelines, not just delivering some generic cloud solutions.

In the meantime, their team has helped us with some network and user management configuration. We don’t have any of the DCC software installed directly on the workstations. We’re using a “central” NAS, which hosts it for all our users. This is something I implemented a long time ago, but now we needed to make it more advanced and add LDAP user management.

DP: How reliant are you on cloud infrastructure? Are you using it for rendering, collaboration, asset management—or all of the above?

Lukas Remis: I’ve used some cloud/online rendering solutions in the past, but currently we’re relying fully on our own hardware. Remote artists can connect through our VPN and “stream” a workstation, and they have access to all project data.

DP: How do you find and train artists comfortable with open-source tools like Blender or Gaffer? Is the talent pool growing—or do you invest heavily in onboarding?

Lukas Remis: At the moment Blender is our only open-source app that requires artists to know it. We have amazing Blender generalists, and the software is so popular that it’s not that hard to find new talent. For Red Poppies we needed a senior animator for some of the bear shots, so I reached out to a friend of mine, who’s a Maya animator. He was willing to try animating in Blender, and all he needed was a YouTube tutorial about Blender basics and an introduction to our pipeline. After getting familiar with the interface, he said he didn’t see much difference in the animation process.

DP: Is Imaginary Pixels contributing to the open-source projects you use—via code, bug fixes, or documentation?

Lukas Remis: As mentioned, we don’t have developers on our team, so we can only report bugs. But of course we are financially supporting Blender, Krita and Kitsu. Kitsu also has a nice system for recommending new features, so we can suggest changes based on real-world cases.

DP: Are you planning to expand your open-source pipeline for future projects? Any new workflows—real-time rendering, AI, virtual production—on the horizon?

Lukas Remis: Of course, like everyone, we’re thinking about AI and its possible applications. However, so far, most of the generative tools that I’ve tested are nowhere near production ready. The main issue is complete randomness and a lack of output control. But the media are completely hyped about AI, presenting it as the downfall of VFX and Hollywood in general 🙂

We’re using some AI tools integrated with Nuke, and planning to implement some generative AI with ComfyUI.

DP: What are your criteria when evaluating a new open-source tool for adoption? How do you assess codebase health, community size, or long-term viability?

Lukas Remis: The easiest way is to check the software’s GitHub page, and see how many people are contributing to the code and how often. That’s something you never know when using commercial closed-source software.
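As a rough sketch of how "how many people are contributing and how often" could be turned into numbers, the function below summarises a list of commit records. It assumes the (author, date) pairs have already been exported from the repository's history. The record format, the 90-day window, and the metric names are all assumptions for this example, not something described in the interview.

```python
from collections import Counter
from datetime import date, timedelta


def summarize_activity(commits, today, window_days=90):
    """Summarise contributor activity from (author, commit_date) records.

    `commits` is an iterable of (author_name, datetime.date) tuples,
    a hypothetical export of a repository's commit history.
    """
    commits = list(commits)
    cutoff = today - timedelta(days=window_days)
    recent = [(author, day) for author, day in commits if day >= cutoff]
    per_author = Counter(author for author, _ in recent)
    last_commit = max((day for _, day in commits), default=None)
    return {
        # How many commits landed inside the window.
        "recent_commits": len(recent),
        # How many distinct people made them.
        "recent_contributors": len(per_author),
        # A long gap here can hint that a project is stalling.
        "days_since_last_commit": (today - last_commit).days if last_commit else None,
        # Share of recent commits by the busiest author (1.0 = one-person project).
        "top_contributor_share": max(per_author.values()) / len(recent) if recent else None,
    }


history = [
    ("ana", date(2025, 5, 1)),
    ("ana", date(2025, 5, 20)),
    ("ben", date(2025, 4, 15)),
    ("cy", date(2024, 1, 1)),
]
print(summarize_activity(history, today=date(2025, 6, 1)))
```

A high `top_contributor_share` together with a low contributor count suggests the project depends on a single maintainer, which is worth weighing alongside raw commit frequency.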

DP: Open-source projects can be abandoned. How do you hedge against that risk?

Lukas Remis: Yes, there’s always a risk that an open-source project can be abandoned. However, as we have recently seen with Foundry’s Modo, you’re not getting any guarantees with commercial software either. Modo’s users have not only been abandoned, but their licences are also going to be cancelled, so they won’t even be able to use the old versions. With open source, the code is still there, even if it’s abandoned. And you can continue developing it yourself.

DP: How has the shift to open-source tools changed your studio culture—especially in terms of collaboration, experimentation, and problem-solving?

Lukas Remis: I think using open source software encourages everyone to experiment. There is no “correct way” of doing things; you should always search for new possibilities and stay creative. Our artists often send me links to new, interesting tools they’ve found, so we can consider whether they would be useful for us.

DP: Would you say your current pipeline is fully production-ready—or still evolving? What advice would you give to other studios considering a similar move?

Lukas Remis: Yes, it is production ready. We’re using it on all our projects. Of course, it’s still evolving, as things that work for us now might not work when we grow bigger or when we meet different tasks. But the beauty of open-source software is that you can try it for free. If it turns out that a tool is not meeting your requirements, you can just move on, or perhaps help to develop the tool. With commercial software, once you have invested large amounts of money in a solution, it might not be possible to just move to something else. Obviously, it’s not possible to be completely open-source when you want to make professional VFX, but there’s a lot of great free software out there.

DP: What good resources are out there to learn more about it?

Lukas Remis: For Blender, your best source of information will be YouTube and Stack Exchange. And for general information about open source VFX tools, I recommend this page: github.com/cgwire/awesome-cg-vfx-pipeline. It’s an extensive list of different projects, covering every aspect of a VFX pipeline.

DP: What is the next project you are working on (insofar as you can talk about it)?

Lukas Remis: At the moment we’re working on some Polish projects: a TV series and a feature film: Vinci 2. At the beginning of this year, we worked on several commercials for the Super Bowl, and before that we were focused on Return to Silent Hill, which should have a premiere at the end of this year.