Jules Neghnagh Chenavas has released GodotARKit, an open-source plug-in that enables real-time facial motion capture inside Godot 4. The plug-in receives facial tracking data from Apple’s ARKit over UDP and is designed to work directly with Epic Games’ Live Link Face app for iOS.

The system uses ARKit’s full set of facial blendshapes (52 standard expressions plus head and eye rotations) and feeds them straight into Godot, eliminating the need for intermediate DCC software. Because the data is processed inside the running engine, the plug-in is suitable for live performance capture, real-time animation previews, or in-engine cutscenes.
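To make the “52 plus head and eye rotations” layout concrete, the Python sketch below splits one flat frame of values into its parts. It assumes three angles (yaw, pitch, roll) each for the head and for both eyes, giving 61 values per frame, with the blendshapes stored first. That ordering and the `FaceFrame` and `split_frame` names are hypothetical, not GodotARKit’s actual data structures.

```python
from dataclasses import dataclass

BLENDSHAPE_COUNT = 52  # ARKit's standard facial blendshapes
ROTATION_COUNT = 9     # assumed: yaw/pitch/roll for head, left eye, right eye
FRAME_SIZE = BLENDSHAPE_COUNT + ROTATION_COUNT  # 61 values per frame


@dataclass
class FaceFrame:
    blendshapes: list[float]
    head: tuple[float, float, float]
    left_eye: tuple[float, float, float]
    right_eye: tuple[float, float, float]


def split_frame(values: list[float]) -> FaceFrame:
    """Split a flat frame into its parts (hypothetical ordering)."""
    if len(values) != FRAME_SIZE:
        raise ValueError(f"expected {FRAME_SIZE} values, got {len(values)}")
    b = BLENDSHAPE_COUNT
    return FaceFrame(
        blendshapes=list(values[:b]),
        head=tuple(values[b:b + 3]),
        left_eye=tuple(values[b + 3:b + 6]),
        right_eye=tuple(values[b + 6:b + 9]),
    )


frame = split_frame([0.0] * 52 + [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9])
print(len(frame.blendshapes), frame.head)  # 52 (0.1, 0.2, 0.3)
```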

Live Link Face Integration

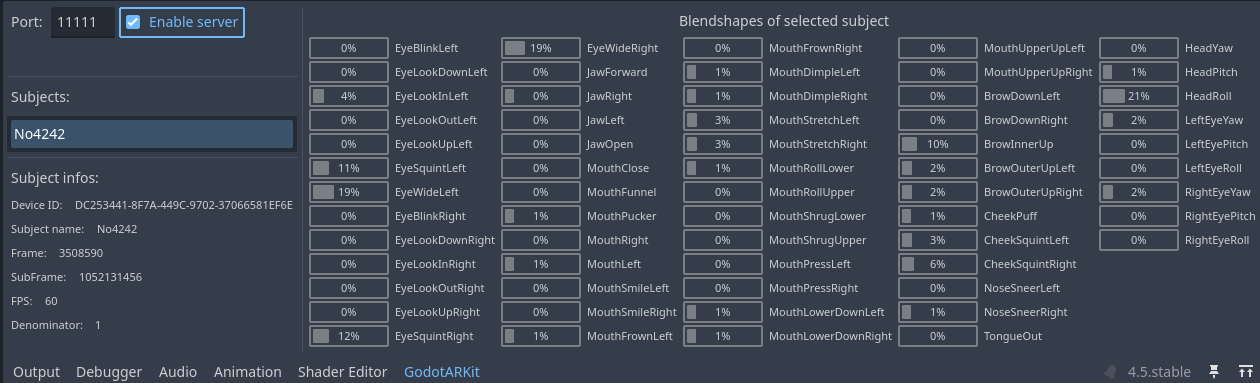

According to the README, users configure Live Link Face to send data to the IP address and port of the machine running Godot on their local network; GodotARKit then receives the stream and visualises it in an integrated editor panel. The interface displays connected devices, current blendshape values, packet frame information, and error messages in real time. The plug-in runs as an autoload singleton, so blendshape data is accessible globally from any script. The repository includes a working demo script that shows how to map blendshapes and head rotations to a character rig in a few lines of GDScript.
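The receiving side can be sketched in Python with a non-blocking UDP socket that is drained once per frame, so the game loop never stalls waiting for the phone. The packet layout here (a length-prefixed subject name followed by big-endian 32-bit floats) is a simplified, hypothetical format chosen for illustration and is not the real Live Link Face wire format; `encode_packet`, `decode_packet`, `open_listener`, and `poll` are likewise hypothetical names.

```python
import socket
import struct

# Hypothetical wire layout for this sketch (NOT the real Live Link Face format):
#   uint16 big-endian name length | UTF-8 subject name | N big-endian float32 values


def encode_packet(subject: str, values: list[float]) -> bytes:
    name = subject.encode("utf-8")
    header = struct.pack(">H", len(name)) + name
    return header + struct.pack(f">{len(values)}f", *values)


def decode_packet(data: bytes) -> tuple[str, list[float]]:
    (name_len,) = struct.unpack_from(">H", data, 0)
    offset = 2
    name = data[offset:offset + name_len].decode("utf-8")
    offset += name_len
    count = (len(data) - offset) // 4  # remaining bytes are float32 values
    values = list(struct.unpack_from(f">{count}f", data, offset))
    return name, values


def open_listener(port: int) -> socket.socket:
    """Bind a non-blocking UDP socket on all interfaces."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind(("0.0.0.0", port))
    sock.setblocking(False)
    return sock


def poll(sock: socket.socket) -> list[tuple[str, list[float]]]:
    """Drain every pending datagram without blocking; call once per frame."""
    frames = []
    while True:
        try:
            data, _addr = sock.recvfrom(65535)
        except BlockingIOError:
            break  # nothing left to read this frame
        frames.append(decode_packet(data))
    return frames


print(decode_packet(encode_packet("iPhone", [0.0, 0.5, 1.0])))
# ('iPhone', [0.0, 0.5, 1.0])
```

Draining all pending datagrams each frame means a burst of packets is processed at once rather than one per frame, which keeps the receiver from falling behind a phone streaming at a high rate. This is a design choice of the sketch, not necessarily how GodotARKit handles it.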

Under the Hood

The plug-in’s architecture consists of a UDP server, a packet decoder, and an ARKitSubject class for device management. Each connected iOS device becomes a “subject” that broadcasts ARKit blendshape frames, and users can monitor connection status and debug incoming data in Godot’s bottom panel without leaving the editor. Components communicate through signals and weak references rather than strong object references, which helps prevent memory leaks and reference cycles, a common problem in real-time networked systems. The developer explicitly notes using this approach to avoid referencing the ARKitSingleton directly.
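The weak-reference pattern can be illustrated with a small Python signal class that stores listeners as `weakref.WeakMethod` objects, so a subject broadcasting frames never keeps a listener alive on its own. `Signal`, `Subject`, `FaceRig`, and `subject_for` are hypothetical stand-ins for the plug-in’s classes, not its actual API.

```python
import gc
import weakref
from typing import Callable


class Signal:
    """Minimal signal that keeps only weak references to bound-method listeners."""

    def __init__(self) -> None:
        self._slots: list[weakref.WeakMethod] = []

    def connect(self, method: Callable) -> None:
        # WeakMethod does not keep the listener object alive.
        self._slots.append(weakref.WeakMethod(method))

    def emit(self, *args) -> None:
        alive = []
        for ref in self._slots:
            method = ref()
            if method is not None:
                method(*args)
                alive.append(ref)
        # Drop listeners whose objects have been garbage-collected.
        self._slots = alive

    def __len__(self) -> int:
        return len(self._slots)


class Subject:
    """Hypothetical stand-in for one connected device (an ARKitSubject)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.frame_received = Signal()


class FaceRig:
    """Hypothetical listener, e.g. a character that applies blendshapes."""

    def __init__(self) -> None:
        self.last = None

    def on_frame(self, subject_name: str, values: list[float]) -> None:
        self.last = (subject_name, values)


subjects: dict[str, Subject] = {}


def subject_for(name: str) -> Subject:
    """Return the subject for a device name, creating it on first sight."""
    if name not in subjects:
        subjects[name] = Subject(name)
    return subjects[name]


rig = FaceRig()
phone = subject_for("iPhone")
phone.frame_received.connect(rig.on_frame)
phone.frame_received.emit(phone.name, [0.5])
print(rig.last)  # ('iPhone', [0.5])

del rig  # only the signal's weak reference remains
gc.collect()
phone.frame_received.emit(phone.name, [0.6])
print(len(phone.frame_received))  # 0
```

Once the rig is deleted, the next `emit` finds a dead reference and drops it, so the subject never holds the listener in memory. CPython frees the rig as soon as its last strong reference disappears; the `gc.collect()` call is there for runtimes that collect lazily.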

Example Implementation

The example provided shows how to animate a MeshInstance3D by looping through the blendshapes in each incoming data packet. Head and eye rotations are handled separately: their values are converted into rotation quaternions for the neck and eye bones. A note in the demo states that both eyes currently share identical rotation behaviour, a known limitation marked for future refinement.
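The two steps in the demo can be sketched in Python: copying each named blendshape weight onto a mesh (modelled here as a plain dict) and turning head angles into a quaternion. The clamp to [0, 1], the assumed [-1, 1] input range for head values, the hypothetical `MAX_HEAD_ANGLE` scale, and the Y-X-Z composition order are all assumptions for illustration, not details taken from GodotARKit’s demo.

```python
import math

MAX_HEAD_ANGLE = math.radians(45)  # hypothetical scale, not taken from GodotARKit


def apply_blendshapes(mesh_weights: dict[str, float], incoming: dict[str, float]) -> None:
    """Copy each incoming weight onto the mesh, skipping shapes the mesh lacks."""
    for name, value in incoming.items():
        if name in mesh_weights:
            mesh_weights[name] = max(0.0, min(1.0, value))  # clamp to [0, 1]


def quat_mul(a, b):
    """Hamilton product of two quaternions stored as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def axis_angle(axis, angle):
    """Unit quaternion for a rotation of `angle` radians about a unit axis."""
    s = math.sin(angle / 2.0)
    return (math.cos(angle / 2.0), axis[0] * s, axis[1] * s, axis[2] * s)


def euler_yxz_to_quat(yaw, pitch, roll):
    """Build q = q_yaw * q_pitch * q_roll (Y-X-Z order, angles in radians)."""
    qy = axis_angle((0.0, 1.0, 0.0), yaw)
    qx = axis_angle((1.0, 0.0, 0.0), pitch)
    qz = axis_angle((0.0, 0.0, 1.0), roll)
    return quat_mul(quat_mul(qy, qx), qz)


def head_quat(yaw_n, pitch_n, roll_n):
    """Scale assumed [-1, 1] head values to radians, then build a quaternion."""
    return euler_yxz_to_quat(yaw_n * MAX_HEAD_ANGLE,
                             pitch_n * MAX_HEAD_ANGLE,
                             roll_n * MAX_HEAD_ANGLE)


mesh = {"jawOpen": 0.0, "eyeBlinkLeft": 0.0}
apply_blendshapes(mesh, {"jawOpen": 0.4, "eyeBlinkLeft": 1.2, "unknownShape": 0.9})
print(mesh)  # {'jawOpen': 0.4, 'eyeBlinkLeft': 1.0}
print(head_quat(0.0, 0.0, 0.0))  # (1.0, 0.0, 0.0, 0.0)
```

Working in quaternions rather than raw Euler angles avoids gimbal-lock artefacts when the bone pose is later interpolated; the eye bones could reuse `euler_yxz_to_quat` with their own angles.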

Licensing and Contributions

GodotARKit is distributed under the MIT license, which permits both commercial and non-commercial use. The developer encourages community contributions and openly acknowledges that multi-device setups and some UI elements have seen limited testing. As of the latest commit (made last week at the time of writing), the plug-in is at version 1.0, with updated documentation and icons. The commit history shows regular activity, suggesting the project is actively maintained.

Compatibility

The plug-in targets Godot 4 and uses standard UDP communication, so it requires no proprietary middleware or additional networking libraries. Because it relies on ARKit face tracking, it should work with any iPhone or iPad that supports Apple’s face-tracking API, although the author does not specify minimum device requirements.

Summary

For Godot developers seeking a real-time facial capture solution without switching to Unreal Engine or Unity, GodotARKit offers a minimal, open-source option. It builds on existing ARKit infrastructure and Epic Games’ Live Link Face app to feed high-fidelity facial data into Godot with little setup. As with any new open-source tool, users should test its stability and latency before deploying it in production.