Well, the documentary “Our Universe” has a bigger scope than the west of England, but the VFX house Lux Aeterna is based there, and we got a chance to chat to VFX Supervisor Paul Silcox!

Lux Aeterna is quickly becoming one of the premier scientific documentary houses in the UK, working with the BBC and Netflix on shows like “Brian Cox’s Horizons”, “First Contact”, “Blue Whales” (watch out for that in our coming issues!) and of course “Our Universe”. Narrated by Morgan Freeman, the Netflix documentary series by showrunner Mike Davies, produced by the BBC Science Production Company and stretching over six episodes of roughly 45 minutes each, is an optical extravaganza, connecting recognizable earthly creatures with “all the stuff out there” and telling the story of how this all comes together. Highly recommended!

DP: To get a sense of the scope of your project, how many full CG shots did you create for the six episodes? Was there anything you didn’t simulate?

Paul Silcox: Hi, yes, we did visualise a lot of phenomena, but not everything was simulated. Where possible we leaned on proceduralism in Houdini to produce the variety of FX. In the end there were nearly 400 full CG shots in the show and nearly 700 shots in total, produced over an 18-month period.

DP: How big was the team for this show, and what was your “primary” pipeline?

Paul Silcox: The team for Our Universe varied over the production but was generally between 20 and 30 people. We had a very senior team working on FX, lighting and comp, so we were able to deliver beautiful shots efficiently. To handle the sheer number of active shots and the massive amounts of data used in the show, we boosted our IT infrastructure, adding 70 high-end render nodes, high-bandwidth network hubs and super-fast SSD storage. All of this is managed by our bespoke Shotgrid and Deadline management software.

The core of the studio’s creative output is based around Houdini, Maya and Nuke, and this is managed through Shotgrid, though we still work in C4D and After Effects for certain shots or projects. Our renderers of choice for Our Universe were Arnold, Mantra and Redshift.

DP: Did you use different toolsets for the different shots?

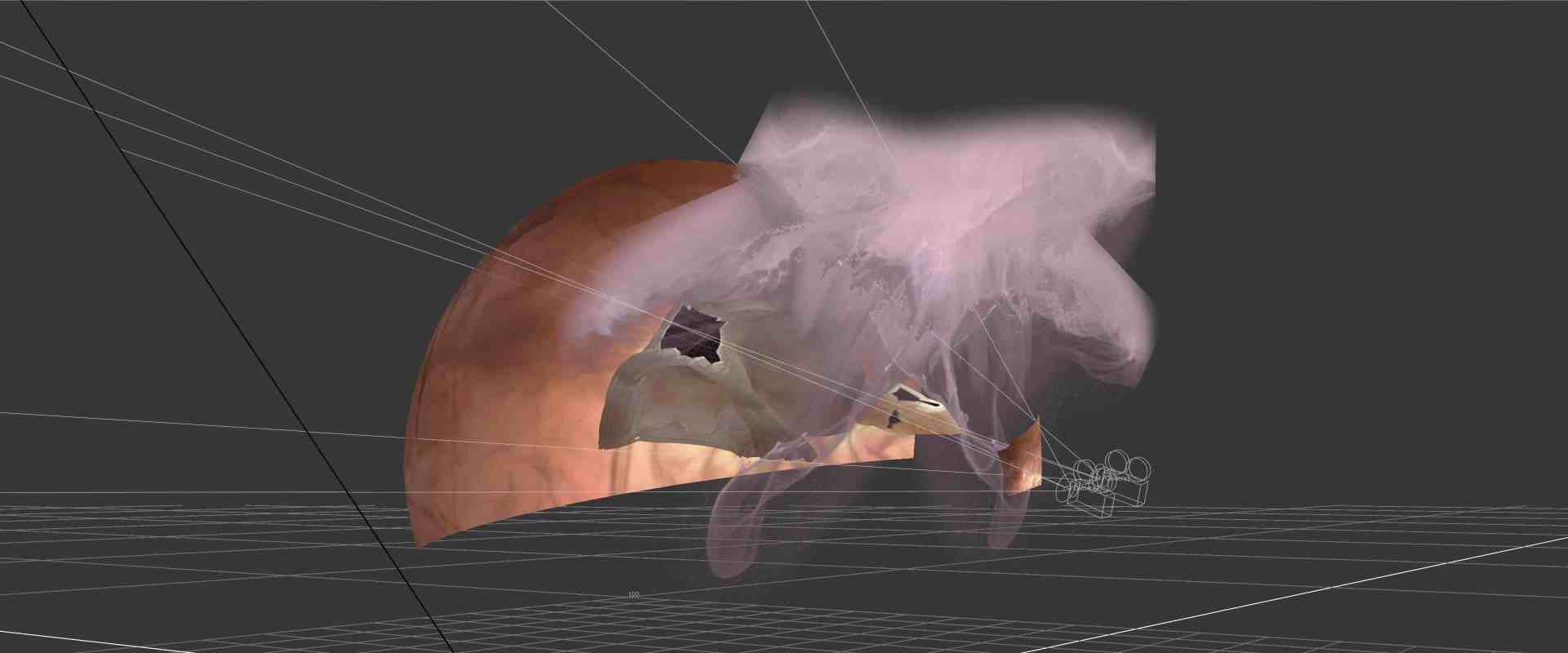

Paul Silcox: Houdini was our go-to creative package for a lot of phenomena, and this was supported by Maya and Nuke. The power of Houdini to test ideas and play with concepts allowed us to communicate scientific stories in a variety of new and interesting ways.

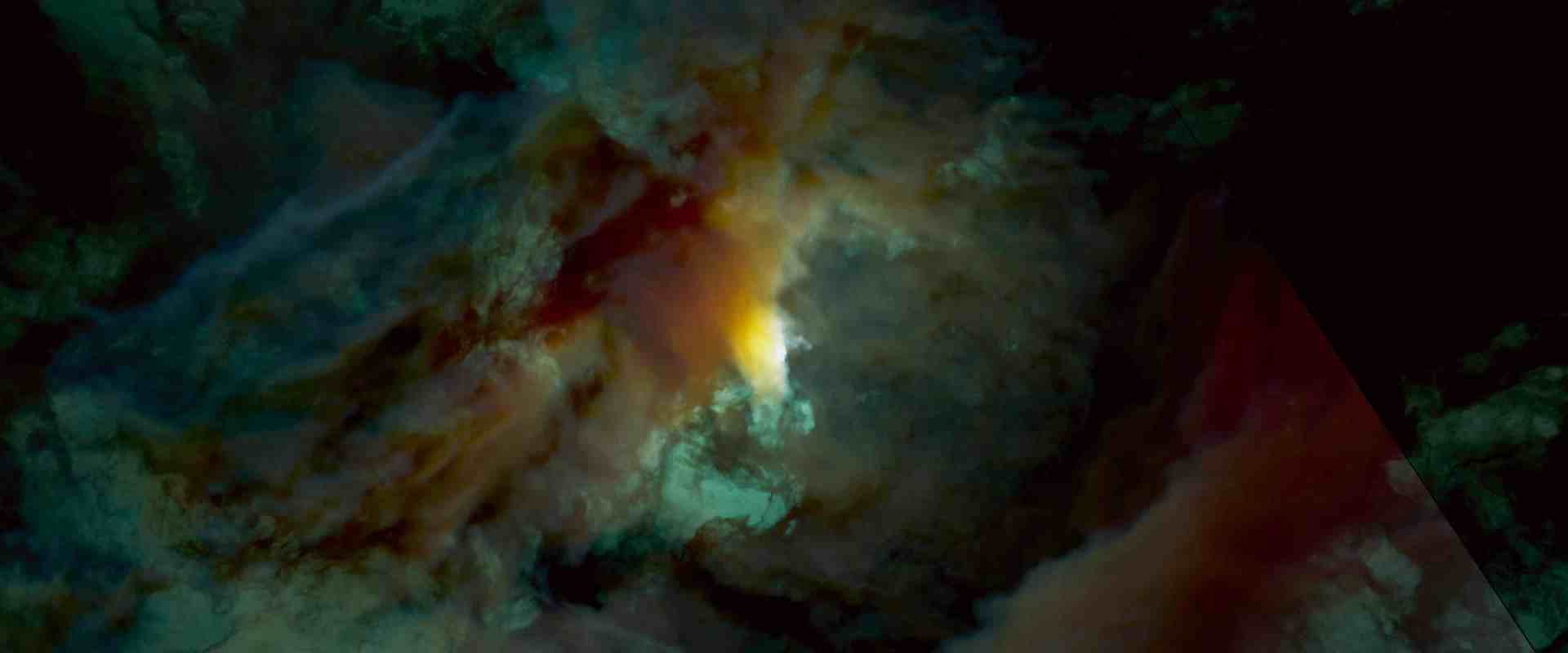

Our Universe was a dream job, as there were so many examples of phenomena that we had to synthesize. From the inside of the cell to the inside of the sun, there was always a new creative challenge. The atomic sequences are a great example of the type of collaboration that marked the production: our CG Supervisor created a beautiful sequence visualising the interaction of atomic particles, and the FX department was tasked with developing a system that could replicate the look across a variety of shots. The team developed a procedural SDF (signed distance field) system in Houdini that was relatively lightweight and art-directable, and this was used to demonstrate the many atomic interactions that drive the universe.
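For readers unfamiliar with the term, here is a minimal sketch of what a procedural signed distance field can look like, written in plain Python rather than Houdini. It is not Lux Aeterna's system: the function names, the sphere-per-atom model and the blend width `k` are all assumptions for illustration, and the blend is the widely used polynomial smooth minimum.

```python
import math

def sphere_sdf(p, center, radius):
    """Signed distance from point p to a sphere: negative inside, positive outside."""
    return math.dist(p, center) - radius

def smooth_union(d1, d2, k):
    """Polynomial smooth minimum: blends two distances over a band of width k."""
    h = max(k - abs(d1 - d2), 0.0) / k
    return min(d1, d2) - h * h * k * 0.25

def atoms_sdf(p, atoms, k=0.3):
    """Distance to a blended cluster of spheres.

    atoms is a list of (center, radius) pairs (a hypothetical stand-in for
    whatever drives the real setup).
    """
    d = float("inf")
    for center, radius in atoms:
        d = smooth_union(d, sphere_sdf(p, center, radius), k)
    return d

# Two overlapping "atoms"; the field dips below either sphere where they meet.
pair = [((0.0, 0.0, 0.0), 1.0), ((1.5, 0.0, 0.0), 1.0)]
print(atoms_sdf((0.75, 0.0, 0.0), pair))
```

Because the whole shape is just a function of position, moving an atom or changing `k` reshapes the surface without any simulation, which is one reason such setups tend to be light and easy to art-direct.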

DP: Did you add any special tools to the Houdini pipeline?

Paul Silcox: Houdini was our primary FX software, though other packages were used for simulation FX. For one sequence we handled a massive SPH (smoothed-particle hydrodynamics) dataset computed in SWIFT at Durham University on the COSMA supercomputer. The data arrived in HDF5 format and was converted to a usable format for visualisation in Houdini. We also use MASH in Maya, particles in Nuke, and After Effects with Element 3D.
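The interview does not say how the HDF5 data was converted, so the following is only a rough sketch of one possible approach: subsample the particles and write them out as a plain CSV point table. It assumes the third-party `h5py` package is installed, and the dataset names `PartType0/Coordinates` and `PartType0/Densities`, the `stride` parameter and both function names are assumptions for illustration, not details from the production.

```python
import csv

def write_point_table(positions, densities, out_path):
    """Write one CSV row per particle: x, y, z, density."""
    with open(out_path, "w", newline="") as fh:
        writer = csv.writer(fh)
        writer.writerow(["x", "y", "z", "density"])
        for (x, y, z), rho in zip(positions, densities):
            writer.writerow([float(x), float(y), float(z), float(rho)])

def convert_snapshot(hdf5_path, out_path, stride=100):
    """Keep every stride-th particle of an HDF5 snapshot and dump it as CSV.

    The dataset names below are assumptions about the file layout.
    """
    import h5py  # third-party; imported here so write_point_table works without it
    with h5py.File(hdf5_path, "r") as snap:
        positions = snap["PartType0/Coordinates"][::stride]
        densities = snap["PartType0/Densities"][::stride]
    write_point_table(positions, densities, out_path)
```

Subsampling with a stride keeps memory use manageable for a first look at a very large dataset; a production conversion would more likely stream the data in chunks into a binary geometry or volume format.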

DP: And then you added particles to everything?

Paul Silcox: Lux Aeterna’s comp team did a brilliant job of working with Houdini and Maya environments, simulations and FX, and they are always adding a sprinkle of something to a shot, from volumetric lighting to new particles. Our 3D teams worked closely with comp to make sure they had everything necessary in the renders from an AOV point of view, and all shots were lit and rendered in ACES colour space to the required delivery spec.

DP: How did you keep an overview of these many, sometimes rather similar shots?

Paul Silcox: Lux Aeterna used Shotgrid to manage the shot delivery, and our workflows allowed a significant number of shots to be live and worked on concurrently. Production was brilliant at managing the reviews through the playlists on Shotgrid, and for the artists this worked very well. You’d think that it would be easy to get confused when the differences between shots are subtle, but it’s like the penguins finding their young: once you have worked on a shot, you can find it and talk about it instantly, no matter how many similar versions you have worked on.

DP: Did you have to develop something from scratch for “Our Universe”?

Paul Silcox: Our Universe presented many challenges, and Lux Aeterna responded in a variety of ways, developing numerous new workflows. One example was our collaboration with Amazon AWS. We had used online render farms many times before, but we had never needed so many hours of rendering in such a short delivery window. We worked closely with the UK and US teams to implement the AWS software and learn the intricacies of working with datacenter infrastructure, adding several hundred nodes to our render capacity at peak times.

DP: And speaking of delivery, how long did you (approximately) render per frame?

Paul Silcox: Ha, how long is a piece of string? Let me just say this: there were a significant number of shots with super-complex volumes, billions of voxels instanced many times, SSS shots with full-frame transmission and scenes with many millions of polygons, all rendered at 4K. A lot of these shots would take five hours or more per frame to render all layers, so the demand on the render farm was huge.
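To put five hours per frame into perspective, here is a back-of-the-envelope sketch. The 70 local nodes and the five-hour figure come from the interview; the shot length of 240 frames, the figure of 300 extra cloud nodes and the assumption that each node renders one frame at a time are hypothetical and only there to make the arithmetic concrete.

```python
import math

def farm_days(frames, hours_per_frame, nodes):
    """Wall-clock days to render a shot, assuming one frame per node at a time."""
    waves = math.ceil(frames / nodes)  # batches of frames the farm works through
    return waves * hours_per_frame / 24.0

shot_frames = 240  # hypothetical: a 10-second shot at 24 fps

print(farm_days(shot_frames, 5, 70))        # the 70 local render nodes only
print(farm_days(shot_frames, 5, 70 + 300))  # hypothetical peak with cloud nodes added
```

Under these assumptions, a single heavy shot ties up the entire local farm for most of a day, which makes the case for bursting to cloud nodes at peak times easy to see.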

DP: In comparison to other shows like Blue Whales or 8 Days, what were the difficulties in the pipeline?

Paul Silcox: Our Universe was complex and wide-ranging in its scope, but the demands are always the same: more power, more storage and more bandwidth. Lux Aeterna has developed a pipeline to handle the complexities of a show like Our Universe, and I was proud to see it delivered so efficiently.

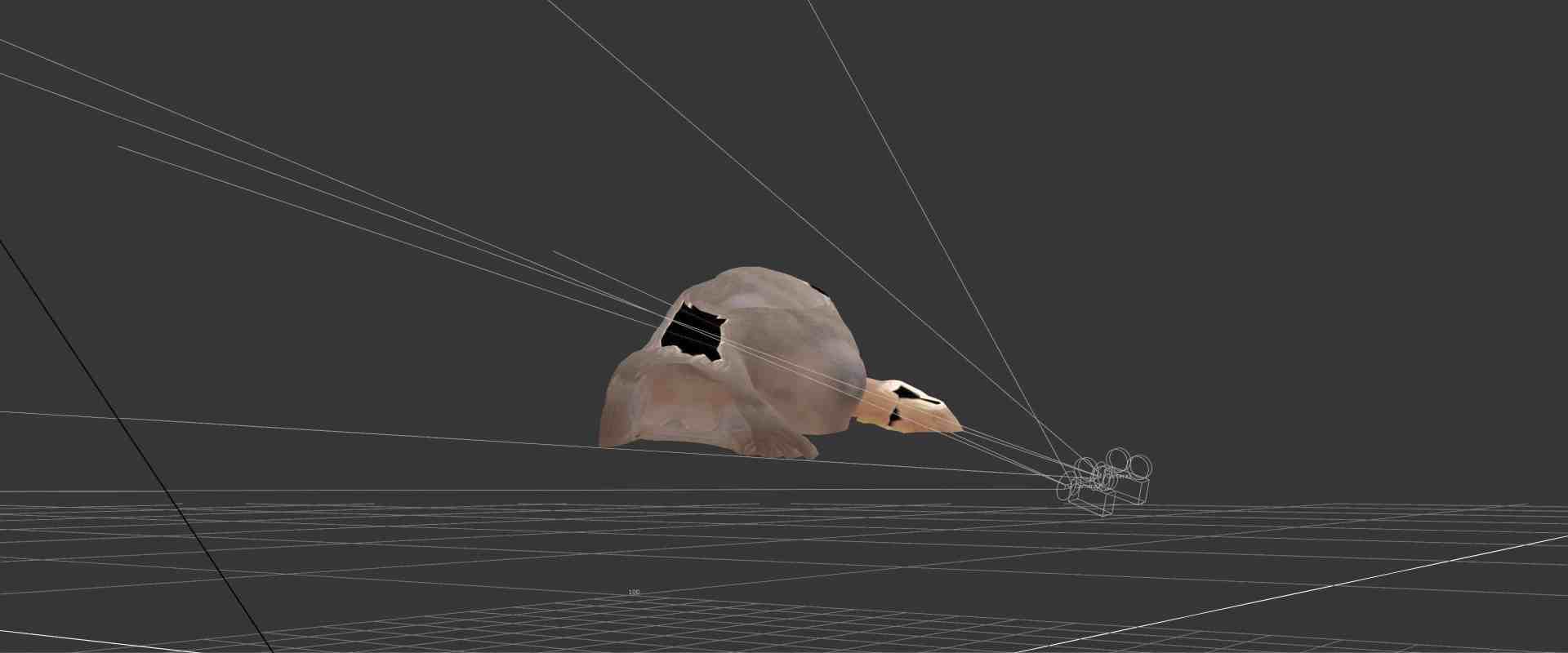

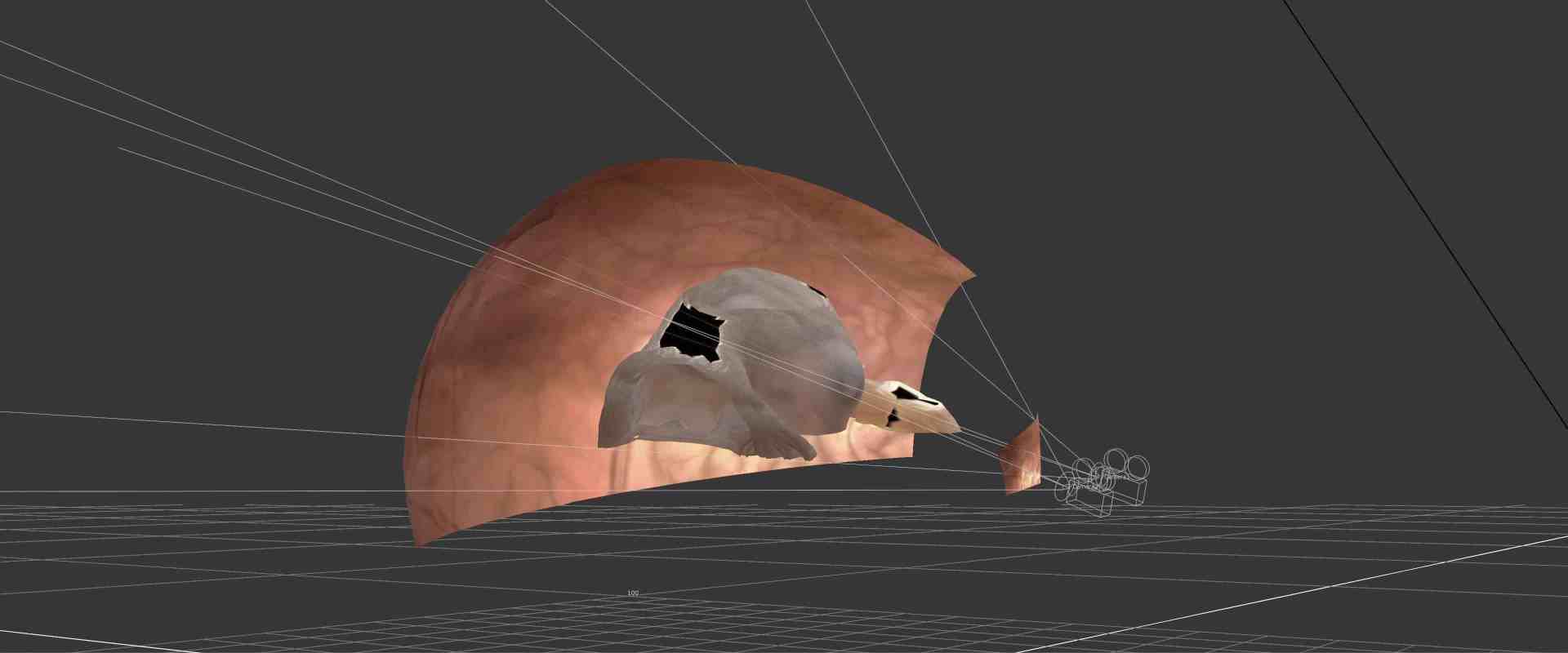

DP: There were “pickup” and “in-between” shots at almost every jump between documentary footage, animals and the space pictures. Did you have a library for that, or did you create a shot for each?

Paul Silcox: Our artists worked particularly hard on the interstitials, creating the timelapse and spatial jump effect that bridged the many sequences. We didn’t rely on anything except the creative flair and extremely hard work of our dedicated team.

DP: I assume that some shots had “invisible VFX” as well?

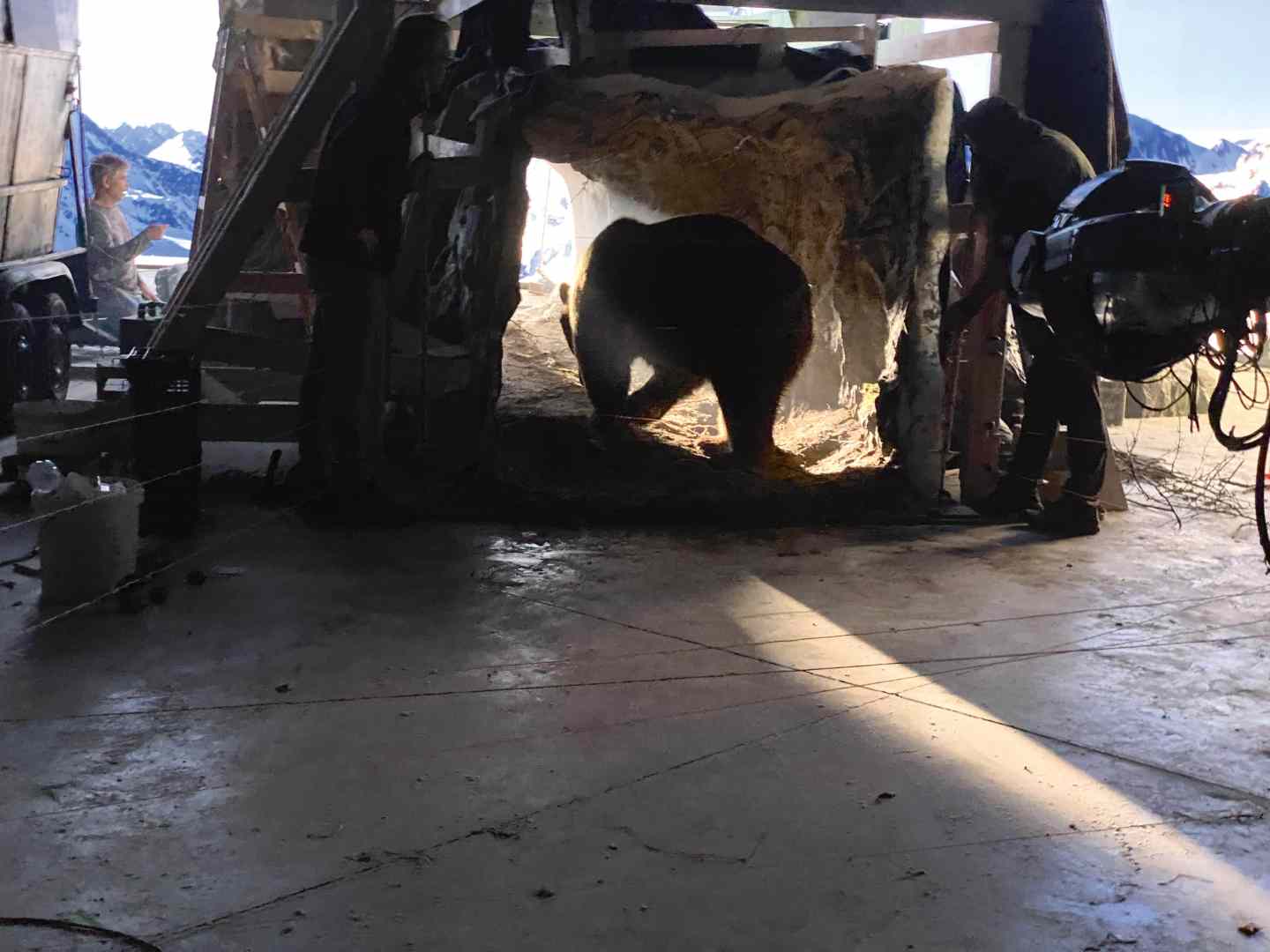

Paul Silcox: There was definitely some cleanup and augmentation, as is always the case for this type of show. Our comp team worked brilliantly to create the continuity in the show, though you’ll have to look hard to find it!

DP: How did you balance between the “Look” and the input from the scientific advisors?

Paul Silcox: Finding the balance between science and entertainment is always a journey. Luckily we have experienced and senior creatives with a long history of scientific storytelling, having worked on BBC Science shows like ‘Wonders of the Universe’, ‘Wonders of Life’ and ‘Human Universe’, just to name a few. They brought this considerable experience to the show, working closely with the showrunner Mike Davies and the brilliant Producer Directors to deliver a cinematic vision and relatable stories to the screen.

DP: What would you do differently if you started at Episode One again?

Paul Silcox: VFX is a dynamic business, so we are always looking forward, not back. Having said that, there are always efficiencies to be found. In an ideal world I would share ALL of our knowledge with our younger selves, even if all it did was bring the render times down.

DP: And what was your favourite shot?

Paul Silcox: Of course, as the supervisor I’m not allowed to have favourite shots ;). I would say that the first episode was fun, as we were right at the beginning of our journey and discovering a look for the programme. It was a really exciting time.

DP: What are you working on now?

Paul Silcox: We are always under such strict rules when it comes to discussing live projects, but what I will tell you is that, as well as our unscripted slate, we are very happy to be working on more scripted productions. As always, we are developing new ways to tell stories and continuing to challenge our skills in environment and FX.